Mitigating Bias in Machine Learning

Part I Introduction

Welcome to Mitigating Bias in Machine Learning. If you’ve made it here, chances are you’ve worked with models and have some awareness of the problem of biased machine learning algorithms. You might be a student with a foundational course in machine learning under your belt, or a Data Scientist or Machine Learning Engineer concerned about the impact your models might have on the world.

In this book we are going to learn and analyse a whole host of techniques for measuring and mitigating bias in machine learning models. We’re going to compare them in order to understand their strengths and weaknesses. Mathematics is an important part of modelling, and we won’t shy away from it. Where possible, we will aim to take a mathematically rigorous approach to answering questions.

Mathematics, just like code, can contain bugs. In this book, each has been used to verify the other. The analysis in this book was completed using Python. The Jupyter Notebooks are available on GitHub for those who would like to see or use them. That said, this book is intended to be self-contained and does not contain code. We will focus on the concepts rather than the implementation.

Mitigating Bias in Machine Learning is ultimately about fairness. The goal of this book is to understand how we, as practising model developers, might build fairer predictive systems and avoid causing harm (sometimes that might mean not building something at all). There are many facets to solving a problem like this, and not all of them involve equations and code. The first two chapters (Part I) are dedicated to discussing these.

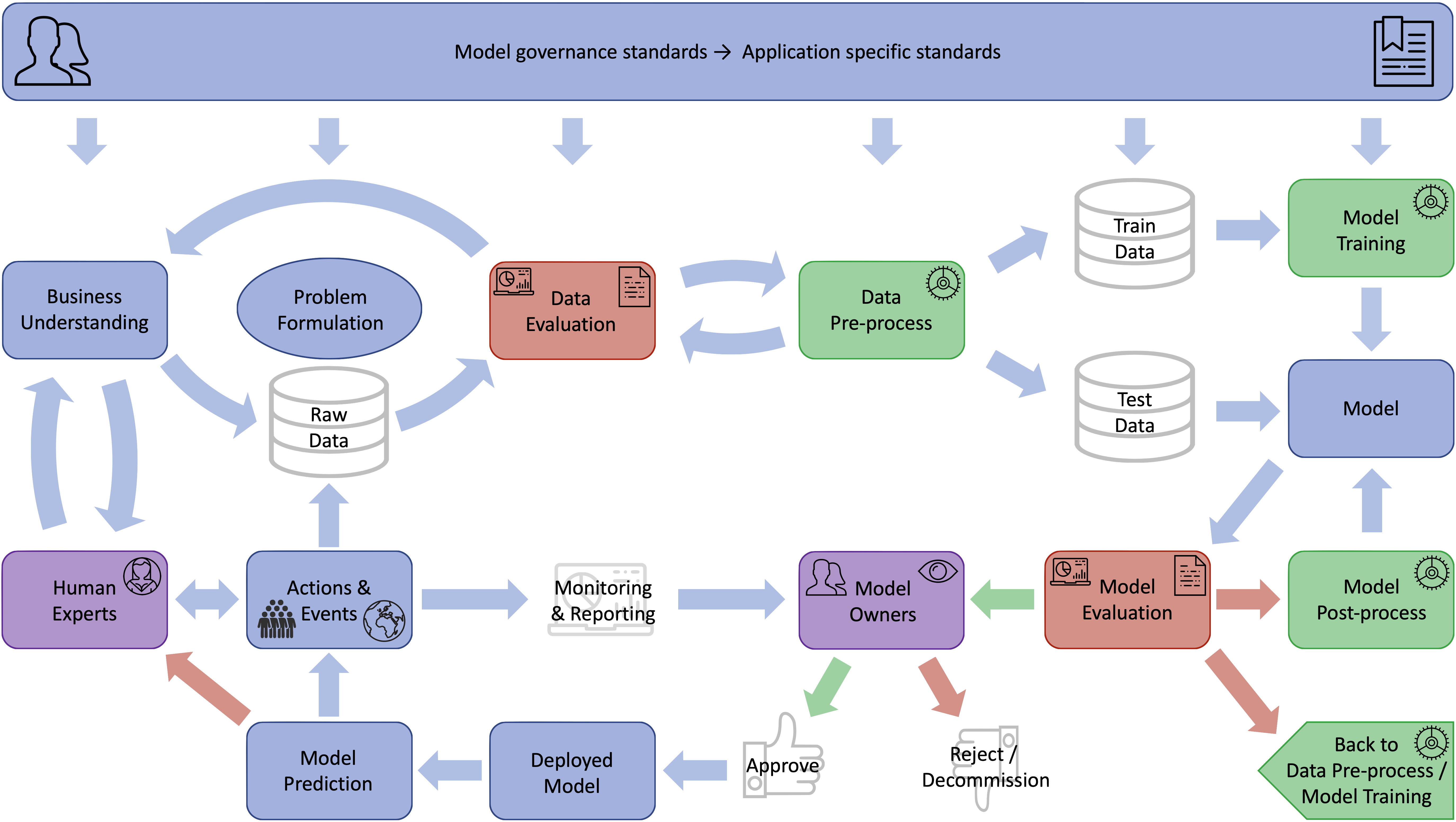

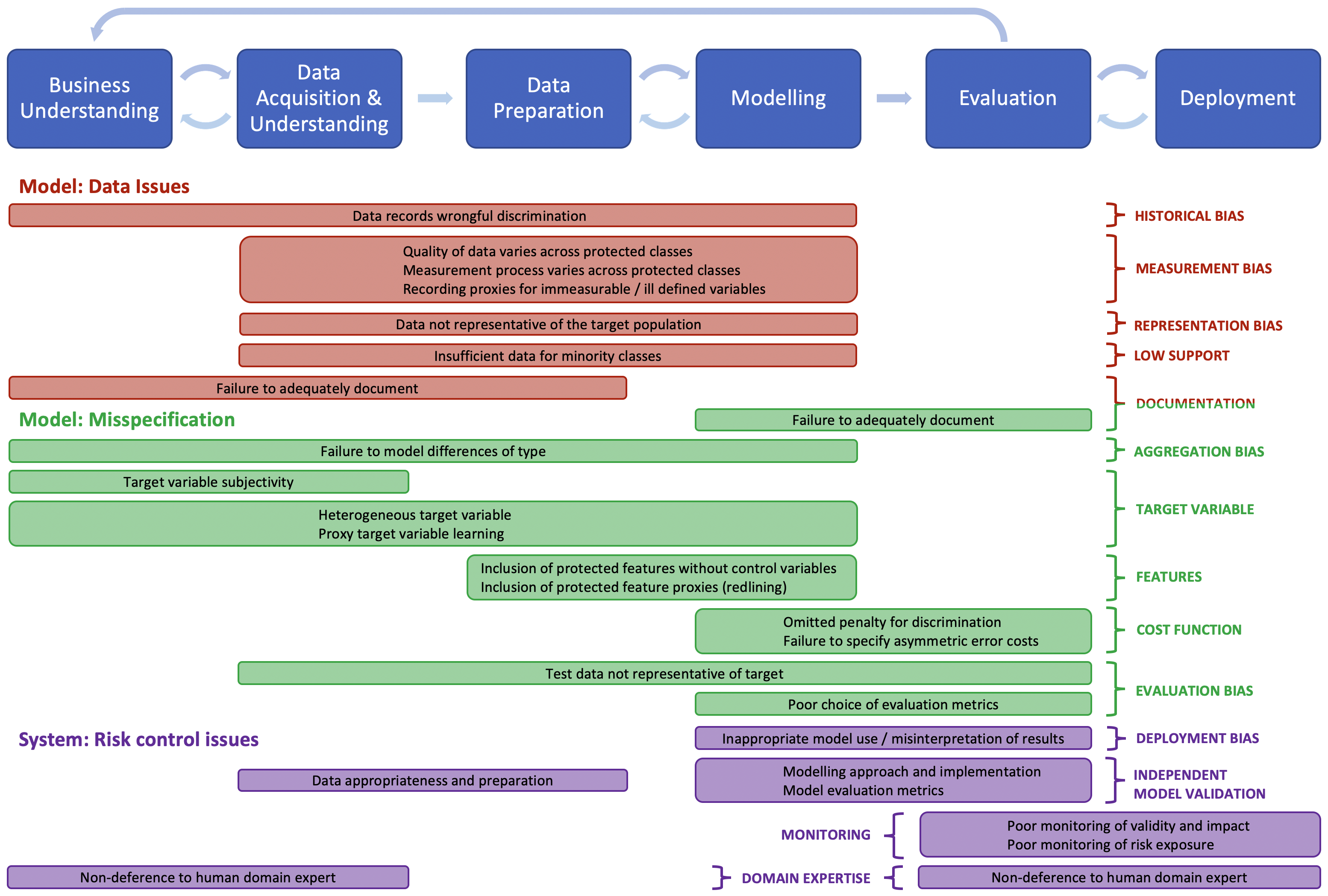

In a sense, over the course of the book, we will zoom in on the problem, or rather narrow our perspective. In chapter 1 we’ll discuss philosophical, political, legal, technical and social perspectives. In chapter 2 we take a more practical view of the problem of ethical development (how to build and organise the development of models with a view to reducing ethical risk).

In Part II we will talk about how we quantify different notions of fairness.

In Part III we will look at methods for mitigating bias through model interventions and analyse their impact.

Let’s get started.

1 Context

This chapter at a glance

Problems with machine learning in sociopolitical domains

Contrasting socio-political theories of fairness in decision systems

The history, application and interpretation of anti-discrimination law

Association paradoxes and the difficulty in identifying bias

The different types of harm caused by biased systems

The goal of this chapter is to shed light on the problem of bias in machine learning from a variety of different perspectives. The word bias can mean many things, but in this book we use it interchangeably with the term unfairness. We’ll talk about why later.

Perhaps the biggest challenge in developing sociotechnical systems is that it inevitably involves questions which are social, philosophical, political and legal in nature; questions to which there is often no definitive answer, but rather competing viewpoints and trade-offs to be made. As we’ll see, this does not change when we attempt to quantify the problem. There are multiple definitions of fairness that have been proven impossible to satisfy simultaneously. The problem of bias in sociotechnical systems is very much an interdisciplinary one and, in this chapter, we treat it as such. We will make connections between concepts and language from the various subjects over the course of this book.

In this chapter we shall discuss some philosophical theories of fairness in sociopolitical systems and consider how they might relate to model training and fairness criteria. We’ll take a legal perspective, looking at anti-discrimination laws in the US as an example. We’ll discuss some of the history behind them, their practical application and the tensions that exist in their interpretation. Data can be misleading; correlation does not imply causation, which is why domain knowledge is imperative in building sociotechnical systems. We will discuss the technical difficulty in identifying bias in static data through illustrative examples of Simpson’s paradox. We’ll discuss why it’s important to consider the fairness of automated systems, and finish the chapter by discussing some of the different types of harm caused by biased machine learning systems: not just allocative harms but also representational harms, which are currently less well defined and are potentially valuable areas of research.

Let’s start by describing the types of problems we are interested in.

1.1 Bias in Machine Learning

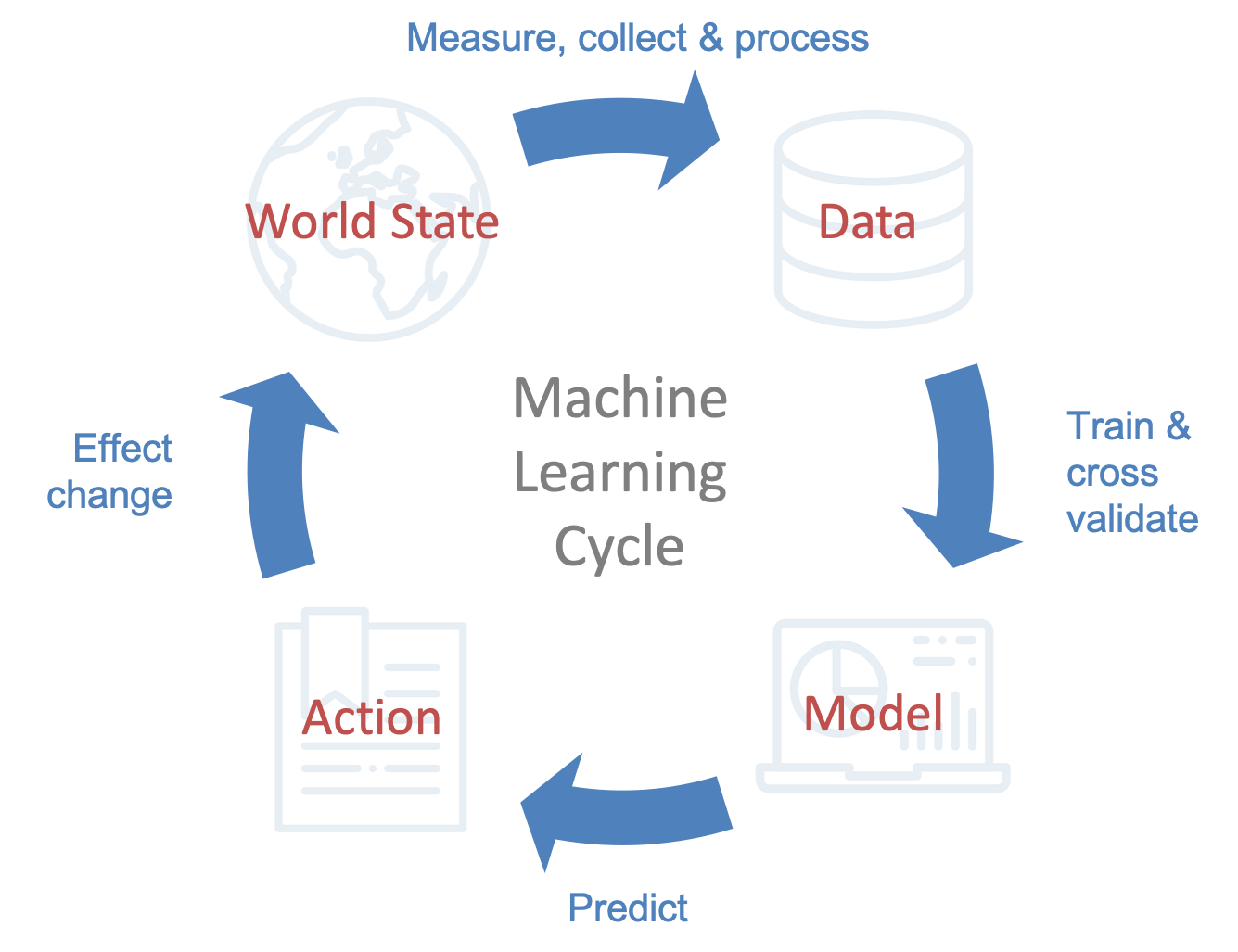

Machine learning can be described as the study of computer algorithms that improve with (or learn from) experience. It can be broadly subdivided into the fields of supervised, unsupervised and reinforcement learning.

Supervised learning

For supervised learning problems, the experience comes in the form of labelled training data. Given a set of features \(X\) and labels (or targets) \(Y\), we want to learn a function or mapping \(f\), such that \(Y = f(X)\), where \(f\) generalizes to previously unseen data.
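To make the supervised setting concrete, here is a minimal sketch assuming NumPy and synthetic data (the linear relationship, noise level and sample sizes are invented for illustration). We choose a family of candidate functions (straight lines), learn \(f\) from labelled pairs \((X, Y)\) by least squares, and then check how well \(f\) generalizes by measuring its error on data it has not seen.

```python
import numpy as np

# Hypothetical labelled training data: one feature X and a noisy linear target Y.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=100)
Y = 3.0 * X + 2.0 + rng.normal(0, 1.0, size=100)

# Learn f from the family of straight lines f(x) = a*x + b by least squares.
# np.polyfit returns coefficients highest degree first: [slope, intercept].
a, b = np.polyfit(X, Y, deg=1)

def f(x):
    return a * x + b

# Check that f generalizes: mean squared error on previously unseen data.
X_new = rng.uniform(0, 10, size=20)
Y_new = 3.0 * X_new + 2.0 + rng.normal(0, 1.0, size=20)
mse = np.mean((f(X_new) - Y_new) ** 2)

print(f"slope={a:.2f}, intercept={b:.2f}, held-out MSE={mse:.2f}")
```

The held-out error, not the training error, is what tells us whether \(f\) has captured the relationship rather than memorised the examples.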

Unsupervised learning

For unsupervised learning problems there are no labels \(Y\), only features \(X\). Instead we are interested in looking for patterns and structure in the data. For example, we might want to subdivide the data into clusters of points with similar (previously unknown) characteristics or we might want to reduce the dimensionality of the data (to be able to visualize it or simply to make a supervised learning algorithm more efficient). In other words, we are looking for a new feature \(Y\) and the mapping \(f\) from \(X\) to \(Y\).

Reinforcement learning

Reinforcement learning is concerned with the problem of optimally navigating a state space to reach a goal state. The problem is framed as an agent that takes actions, which result in rewards (or penalties). The task is then to maximize the cumulative reward. As with unsupervised learning, the agent is not given a set of examples of optimal actions in various states, but rather must learn them through trial and error. A key aspect of reinforcement learning is the existence of a trade-off between exploration (searching unexplored territory in the hope of finding a better choice) and exploitation (exploiting what has been learned so far).

In this book we will focus on the first two categories (essentially algorithms that capture and/or exploit patterns in data), primarily because these are the fields in which problems related to bias in machine learning are most pertinent (automation and prediction). As one would expect, then, these are also the areas in which many of the technical developments in measuring and mitigating bias have been concentrated.

The idea that the kinds of technologies described above are learning is an interesting one. The analogy is clear: learning by example is certainly a way to learn. In less modern disciplines one might simply think of training a model as solving an equation, interpolating data or optimising model parameters. So where does the terminology come from? The term machine learning was coined by Arthur Samuel in the 1950s when, at IBM, he developed an algorithm capable of playing draughts (checkers). By the mid-1970s his algorithm was competitive at amateur level. Though it was not called reinforcement learning at the time, the algorithm was one of the earliest implementations of such ideas. Samuel used the term rote learning to describe a memorisation technique he implemented, in which the machine remembered all the states it had visited and the corresponding reward function in order to extend the search tree.

1.1.1 What is a Model?

Underlying every machine learning algorithm is a model (often several of them) and these have been around for millennia. Based on the discovery of palaeolithic tally sticks (animal bones carved with notches) it’s believed that humans have kept numerical records for over 40,000 years. The earliest mathematical models (from around 4,000 BC) were geometric and used to advance the fields of astronomy and architecture. By 2,000 BC, mathematical models were being used in an algorithmic manner to solve specific problems by at least three civilizations (Babylon, Egypt and India).

A model is a simplified representation of some real-world phenomenon. It is an expression of the relationship between things: a function or mapping which, given a set of input variables (features), returns a decision or prediction (target). A model can be determined with the help of data, but it need not be. It can simply express an opinion as to how things should be related.

If we have a model which represents a theoretical understanding of the world (under a series of simplifying assumptions), we can test it by measuring and comparing the results to reality. Based on the results we can assess how accurate our understanding of the world was and update our model accordingly. In this way, making simplifying assumptions can be a means to iteratively improve our understanding of the world. Models play an incredibly important role in the pursuit of knowledge. They have provided a mechanism to understand the world around us and explain why things behave as they do: to prove that the earth could not be flat, to explain why the stars move and shift in brightness as they do or, (somewhat) more recently in the case of my PhD, to explain why supersonic flows behave uncharacteristically when a shock wave encounters a vortex.

As the use of models has been adopted by industry, their purpose has increasingly been geared towards prediction and automation, as a way to monetize that knowledge. But the pursuit of profit inevitably creates conflicts of interest. If your aim is to learn, then finding out where your theory is wrong and fixing it is the whole point. In business, less so. I recall a joke I heard at school describing how one could tell which field of science an experiment belonged to: if it changes colour, it’s biology; if it explodes, it’s chemistry; and if it doesn’t work, it’s physics. Models of real-world phenomena fail. They are, by their very nature, a reductive representation of an infinitely more complex real-world system. Obtaining adequately rich and relevant data is a major limitation of machine learning models, and yet they are increasingly being applied to problems where that kind of data simply doesn’t exist.

1.1.2 Sociotechnical systems

We use the term sociotechnical systems to describe systems that involve algorithms that manage people. They make efficient decisions for and about us, determine what we see, direct us and more. But managing large numbers of people inevitably exerts a level of authority and control. An extreme example is the adoption of just-in-time scheduling algorithms by large retailers in the US to manage staffing needs. To predict footfall, the algorithms take into account everything from weather forecasts to sporting events. The cost of this efficiency is passed on to employees. The number of hours allocated is optimised to fall short of qualifying for costly health insurance. Employees are subjected to haphazard schedules that prevent them from prioritising anything other than work, eliminating any opportunity that might enable them to advance beyond the low-wage work pool.

Progress in the field of deep learning, combined with the increased availability and decreased cost of computational resources, has led to an explosion in data and model use. Automation seemingly offers a path to making our lives easier, improving the efficiency and efficacy of the many industries we transact with day to day; but there are growing and legitimate concerns over how the benefits (and costs) of these efficiencies are distributed. Machine learning is already being used to automate decisions in just about every aspect of modern life: deciding which adverts to show to whom, deciding which transactions might be fraudulent when we shop, deciding who is able to access financial services such as loans and credit cards, determining our treatment when sick, filtering candidates for education and employment opportunities, determining which neighbourhoods to police and even, in the criminal justice system, deciding what level bail should be set at or the length of a given sentence. At almost every major life event (going to university, getting a job, buying a house, getting sick) decisions are being made by machines.

1.1.3 What Kind of Bias?

The word bias is rather overloaded; it has numerous different interpretations even within the same discipline. Let’s talk about the kinds of bias that are relevant here. In a statistical sense, the word bias describes systematic errors in estimating variables (making predictions) from data. If the goal is to create systems that work similarly well for all types of people, we certainly want to avoid these. In a social context, bias refers to prejudice or discrimination based on characteristics that we as a society deem to be unacceptable or unfair (for example, hiring practices that systematically disadvantage women). Mitigating bias, though, is not just about avoiding discrimination; bias can also manifest when a system fails to adequately discriminate based on characteristics that are relevant to the problem (for example, systematically higher rates of error in visual recognition systems for darker-skinned individuals). Systemic bias and discrimination are observed in data in numerous ways: historical decisions are of course susceptible, but perhaps more important is the very definition of the categories, who is recognised and who is erased. Bias need not be conscious; in reality it starts at the very inception of technology, in deciding which problems are worth solving in the first place. Bias exists in how we measure the cost and benefit of new technologies. For sociotechnical systems, these are all deeply intertwined.

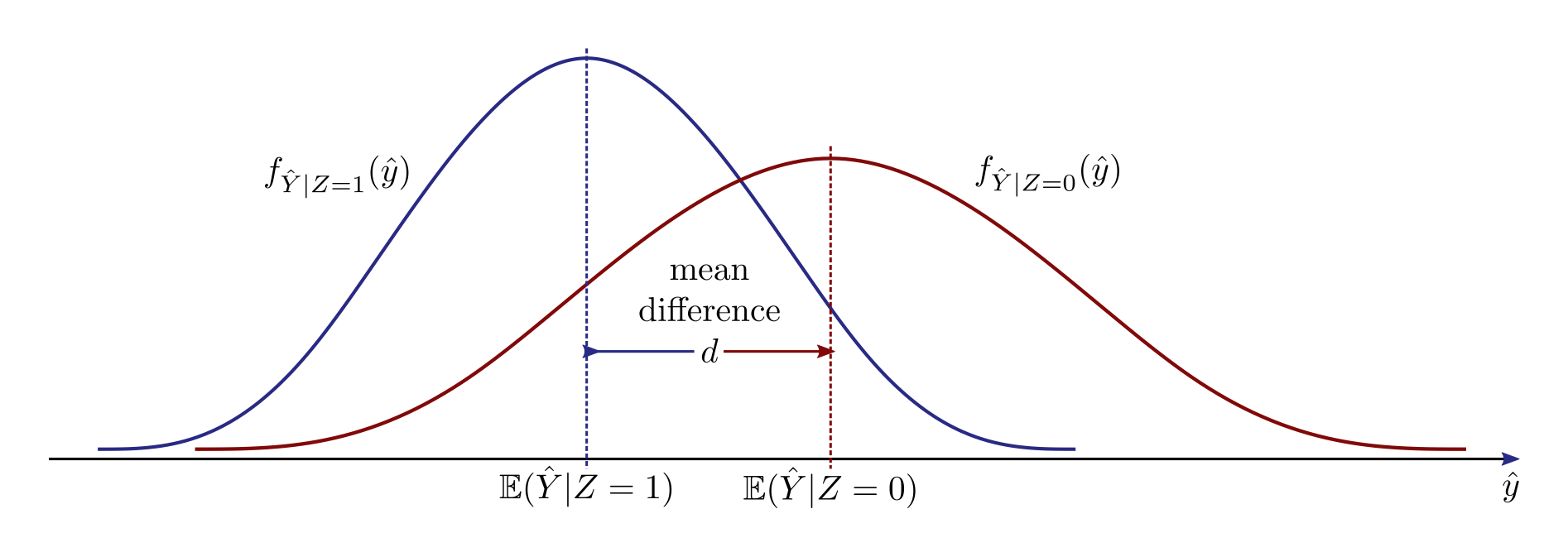

Ultimately, mitigating bias in our models is about fairness, and in this book we shall use the terms interchangeably. Machine learning models are capable not only of proliferating existing societal biases but of amplifying them, and are easily deployed at scale. But how do we even define fairness? And from whose perspective do we mean fair? The law can provide some context here. Laws, in many cases, define protected characteristics and domains (we’ll talk more about these later). We can potentially use these as a guide, and we certainly have a responsibility to be law-abiding citizens. A common approach historically has been to ignore protected characteristics. There are a few reasons for this. One reason is the false belief that an algorithm cannot discriminate based on features not included in the data. This assumption is easy to disprove with a counterexample. A reasonably foolproof way to systematically discriminate by race, or rather ethnicity (without explicitly using it), is to discriminate by location or residence; that is, by another variable that’s strongly correlated with it and serves as a proxy. The legality of this practice depends on the domain. In truth, you don’t need a feature, or a proxy, to discriminate based on it; you just need enough data to be able to predict it. If it is predictable, the information is there, and the algorithm is likely using it. Another reason for ignoring protected features is avoiding legal liability (we’ll talk more about this when we take a legal perspective later in the chapter).
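The claim that dropping a protected characteristic does not prevent discrimination is easy to check in simulation. The sketch below assumes NumPy and an entirely made-up population: the protected attribute `group`, the neighbourhood flag and the agreement rates (90% and 65%) are hypothetical. The first part shows a rule that only looks at neighbourhood producing very different approval rates by group; the second shows that no single strong proxy is needed, since 21 individually weak features combined by a simple majority vote recover the protected attribute far more accurately than any one of them.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 10_000

# Hypothetical protected attribute (0 or 1). It is never given to the decision rules below.
group = rng.integers(0, 2, size=n)

def noisy_copy(agreement):
    """Hypothetical binary feature that equals `group` with probability `agreement`."""
    return np.where(rng.random(n) < agreement, group, 1 - group)

# 1) A single strong proxy: a neighbourhood flag that agrees with group 90% of the time.
neighbourhood = noisy_copy(0.9)
approved = neighbourhood == 0  # rule: only serve neighbourhood 0
rate_0 = approved[group == 0].mean()
rate_1 = approved[group == 1].mean()

# 2) No strong proxy: 21 weak features, each agreeing with group only 65% of the time.
weak = np.column_stack([noisy_copy(0.65) for _ in range(21)])
single_accuracy = np.mean(weak[:, 0] == group)
predicted_group = (weak.sum(axis=1) > 10).astype(int)  # majority vote over 21 features
recovery_accuracy = np.mean(predicted_group == group)

print(f"approval rate: group 0 {rate_0:.1%}, group 1 {rate_1:.1%}")
print(f"group recovered from one weak feature: {single_accuracy:.1%}")
print(f"group recovered from all 21 weak features: {recovery_accuracy:.1%}")
```

The majority vote is deliberately crude. A model trained to optimise some other objective on the same features never needs to be told the protected attribute; if that attribute is predictable from the data, the information is available for it to use.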

Example: Amazon Prime same day delivery service

In 2016, analysis published by Bloomberg [1] uncovered racial disparities in eligibility for Amazon’s same-day delivery service for Prime customers. (To be clear, same-day delivery was free for eligible Amazon Prime customers on sales exceeding $35. Amazon Prime members pay a fixed annual subscription fee, so the disparity is in the level of service provided to Prime customers who are eligible versus those who are not.) The study used census data to identify Black and White residents and plotted the data points on city maps that simultaneously showed the areas that qualified for Prime same-day delivery. The disparities are glaring at a glance. In six major cities (New York, Boston, Atlanta, Chicago, Dallas and Washington, DC) where the service did not have broad coverage, it was mainly Black neighbourhoods that were ineligible. In the latter four cities, Black residents were about half as likely as White residents to live in neighbourhoods eligible for Amazon same-day delivery.

[1] D. Ingold and S. Soper, “Amazon doesn’t consider the race of its customers. Should it?” Bloomberg, 2016.

At the time, Amazon’s process for determining which ZIP codes to serve was reportedly a cost-benefit calculation that did not explicitly take race into account, but for those who have seen redlining maps from the 1930s it is hard not to see the resemblance. Redlining was the (now illegal) practice of declining (or raising prices for) financial products to people based on the neighbourhood where they lived. Because neighbourhoods were racially segregated (a legacy that lives on today), public and private institutions were able to systematically exclude minority populations from the housing market and deny loans for house improvements without explicitly taking race into account. Between 1934 and 1962, the Federal Housing Administration distributed $120 billion in loans. Thanks to redlining, 98% of these went to White families.

Amazon is a private enterprise, and it is legally entitled to make decisions about where to offer services based on how profitable they are. Some might argue it has a right to make those decisions. Amazon is not responsible for the injustices that created such racial disparities, but the reality is that disparities in access to goods and services perpetuate them. If same-day delivery sounds like a luxury, it’s worth considering the context. The cities affected have long histories of racial segregation and economic inequality resulting from systemically racist practices that are now illegal. They contain neighbourhoods which to this day are underserved by brick-and-mortar retailers, where residents are forced to travel further and pay more for household essentials. Now, in the midst of a pandemic, with so many forced to quarantine, delivery of household goods, once a luxury, has suddenly become far more of a necessity. What we consider to be a necessity changes over time; it depends on where one lives, one’s circumstances and more. Finally, consider the scale of Amazon’s operations: in 2016, one third of retail e-commerce spending in the US was with Amazon (that number has since risen to almost 50%).

1.2 A Philosophical Perspective

Developing a model is not an objective scientific process; it involves making a series of subjective choices. Cathy O’Neil describes models as “opinions embedded in code”. One of the most fundamental ways in which we impose our opinion on a machine learning model is in deciding how we measure success. Let’s look at the process of training a model. We start with some parametric representation (a family of models), which we hope is sufficiently complex to be able to reflect the relationships between the variables in the data. The goal in training is to determine which model (in our chosen family) is best, the best model being the one that maximises its utility (from the model developer’s perspective).
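The sketch below illustrates how the choice of utility, rather than the data, can determine which model is “best”. It assumes NumPy and a hypothetical lending scenario: each applicant has a score in \([0, 1]\), the probability of repayment equals that score, and the family of models is the set of rules “approve if score \(\geq t\)”. The gain \(g\) for a repaid loan and loss \(\ell\) for a default are made-up parameters. Under these assumptions the expected utility of approving an applicant with score \(s\) is \(g s - \ell (1 - s)\), which is positive only when \(s > \ell / (g + \ell)\), so the selected threshold should land near 0.5 when \(g = \ell = 1\) and near 0.8 when \(\ell = 4\).

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20_000

# Hypothetical applicants: a score in [0, 1] and whether each would repay a loan.
score = rng.random(n)
repays = rng.random(n) < score  # probability of repaying equals the score

# The family of models: "approve if score >= t", for t on a grid.
thresholds = np.linspace(0, 1, 101)

def best_threshold(gain_repay, loss_default):
    """Return the threshold that maximises the developer's chosen utility."""
    utilities = []
    for t in thresholds:
        approved = score >= t
        gain = gain_repay * np.sum(approved & repays)
        loss = loss_default * np.sum(approved & ~repays)
        utilities.append(gain - loss)
    return thresholds[int(np.argmax(utilities))]

# Two definitions of success, each an opinion about how to weigh outcomes.
t_balanced = best_threshold(gain_repay=1, loss_default=1)  # expected near 0.5
t_cautious = best_threshold(gain_repay=1, loss_default=4)  # expected near 0.8
print(t_balanced, t_cautious)
```

Neither threshold is more objective than the other. Each encodes a judgement about how much a default matters relative to a repaid loan, and each decides which applicants are approved.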

For sociotechnical systems, our predictions don’t only impact the decision maker; they also result in a benefit (or harm) to those subjected to them. The very purpose of codifying a decision policy is often to cheaply deploy it at scale. The more people it processes, the more value there is in codifying the decision process. Another way to look at such models, then, is as a system for distributing benefits (or harms) among a population. Given this, which model is the right one, so to speak? In this section we briefly discuss some philosophical theories relevant to these types of problems. We start with utilitarianism, which is perhaps the easiest theory to draw parallels with in modelling.

1.2.1 Utilitarianism

Utilitarianism provides a framework for moral reasoning in decision making. Under this framework, the correct course of action, when faced with a dilemma, is the one that maximises the benefit for the greatest number of people. The doctrine demands that the benefits to all people are counted equally. Variations of the theory have evolved over the years. Some differ in their notion of how benefits are understood. Others distinguish between the quality of various kinds of benefit. In a business context, one might consider it as financial benefit (and cost), although this itself depends on one’s perspective. Some doctrines advocate that the impact of the action in isolation should be considered, while others ask what the impact would be if everyone in the population took the same actions.

There are some practical problems with utilitarianism as the sole guiding principle for decision making. How do we measure benefit? How do we navigate the complexities of placing a value on immeasurable and vastly different consequences? What is a life, time, money or a particular emotion worth, and how do we compare and aggregate them? How can one even be certain of the consequences? Longer-term consequences are hard, if not impossible, to predict. Perhaps the most significant flaw in utilitarianism for moral reasoning is the omission of justice as a consideration.

Utilitarian reasoning judges actions based solely on consequences, and aggregates them over a population. So, if an action that unjustly harms a minority group happens to be the one that maximises the aggregate benefit over a population, it is nevertheless the correct action to take. Under utilitarianism, theft or infidelity might be morally justified, if those it would harm are none the wiser. Or punishing an innocent person for a crime they did not commit could be justified, if it served to quell unrest among a population. For this reason it is widely accepted that utilitarianism is insufficient as a framework for decision making.

Utilitarianism is a flavour of consequentialism, a branch of ethical theory that holds that consequences are the yardstick against which we must judge the morality of our actions. In contrast, deontological ethics judges the morality of actions against a set of rules that define our duties or obligations towards others. Here it is not the consequences of our actions that matter but rather intent.

The conception of utilitarianism is attributed to British philosopher Jeremy Bentham, who authored the first major book on the topic, An Introduction to the Principles of Morals and Legislation, in 1780. In it Bentham argues that it is the pursuit of pleasure and avoidance of pain alone that motivate individuals to act. Given this, he saw utilitarianism as a principle by which to govern. Broadly speaking, the role of government, in his view, was to assign rewards or punishments to actions in proportion to the happiness or suffering they produced among the governed. At the time, the idea that the well-being of all people should be counted equally, and that the morality of actions should be judged accordingly, was revolutionary. Bentham was a progressive in his time; he advocated for women’s rights (to vote, hold office and divorce), decriminalisation of homosexual acts, prison reform, the abolition of slavery and more. He argued many of his beliefs as a simple economic calculation of how much happiness they would produce. Importantly, he didn’t claim that all people were equal, but rather only that their happiness mattered equally.

Times have changed. Over the last century, as civil rights have advanced, the weaknesses of utilitarianism in practice have been exposed time and time again. Utilitarian reasoning has increasingly been seen as hindering social progress rather than advancing it. For example, utilitarian arguments were used by Whites in apartheid South Africa, who claimed that all South Africans were better off under White rule and that a mixed government would lead to social decline as it had in other African nations. Utilitarian reasoning has been used widely by capitalist nations in the form of trickle-down economics, the theory being that the benefits of tax breaks for the wealthy drive economic growth and ‘trickle down’ to the rest of the population. But evidence suggests that trickle-down economic policies in recent decades have done more harm than good, increasing national debt and fuelling income inequality. Utilitarian principles have also been tested in the debate over torture, capturing a rather callous conviction: that ‘the ends justify the means’.

Historian and author Yuval Noah Harari has eloquently abstracted this problem. He argues that, historically, decentralization of power and efficiency have aligned, so much so that many of us cannot imagine democracy losing out to more totalitarian regimes. But in this new age, data is power. We can train enormous models that require vast amounts of data to process people en masse, organising and sorting them. Importantly, one does not need a perfect system to have an impact, because of the scale on which such systems can be deployed. The question Harari poses is: might the benefits of centralised data offer a great enough advantage to tip the balance of efficiency in favour of more centralised models of power?

1.2.2 Justice as Fairness

In his theory Justice as Fairness [2], John Rawls takes a different approach. He describes an idealised democratic framework, based on liberal principles, and explains how unified laws can be applied (in a free society made up of people with disparate world views) to create a stable sociopolitical system: one in which citizens would not only freely cooperate but also actively advocate for it.

[2] J. Rawls, Justice as fairness: A restatement. Cambridge, Mass.: Harvard University Press, 2001.

He described a political conception of justice which would:

grant all citizens a set of basic rights and liberties

give special priority to the aforementioned rights and liberties over demands to further the general good, e.g. increasing the national wealth

assure all citizens sufficient means to make use of their freedoms.

The special priority given to the basic rights and liberties in the political conception of justice contrasts with a utilitarian doctrine. Here, constraints are placed on how benefits can be distributed among the population, and a strategy is given for determining some minimum that all citizens are assured.

Principles of Justice as Fairness

Liberty principle: Each person has the same indefeasible claim to a fully adequate scheme of equal basic liberties, which is compatible with the same scheme of liberties for all;

Equality principle: Social and economic inequalities are to satisfy two conditions:

Fair equality of opportunity: The offices and positions to which they are attached are open to all, under conditions of fair equality of opportunity;

Difference (maximin) principle: They must be of the greatest benefit to the least-advantaged members of society.

The principles of Justice as Fairness are ordered by priority so that fulfilment of the liberty principle takes precedence over the equality principles and fair equality of opportunity takes precedence over the difference principle.

The first principle grants basic rights and liberties to all citizens, which are prioritised above all else and cannot be traded for other societal benefits. It’s worth spending a moment thinking about what those rights and liberties look like. They are the basic needs that are important for people to be free, to have choices and the means to pursue their aspirations. Today many of what Rawls considered to be basic rights and liberties are allocated algorithmically: education, employment, housing, healthcare and consistent treatment under the law, to name a few.

The second principle requires positions to be allocated meritocratically, with all similarly talented (with respect to the skills and competencies required for the position) individuals having the same chance of attaining such positions i.e. that allocation of such positions should be independent of social class or background. We will return to the concept of equality of opportunity in chapter 3 when discussing Group Fairness.

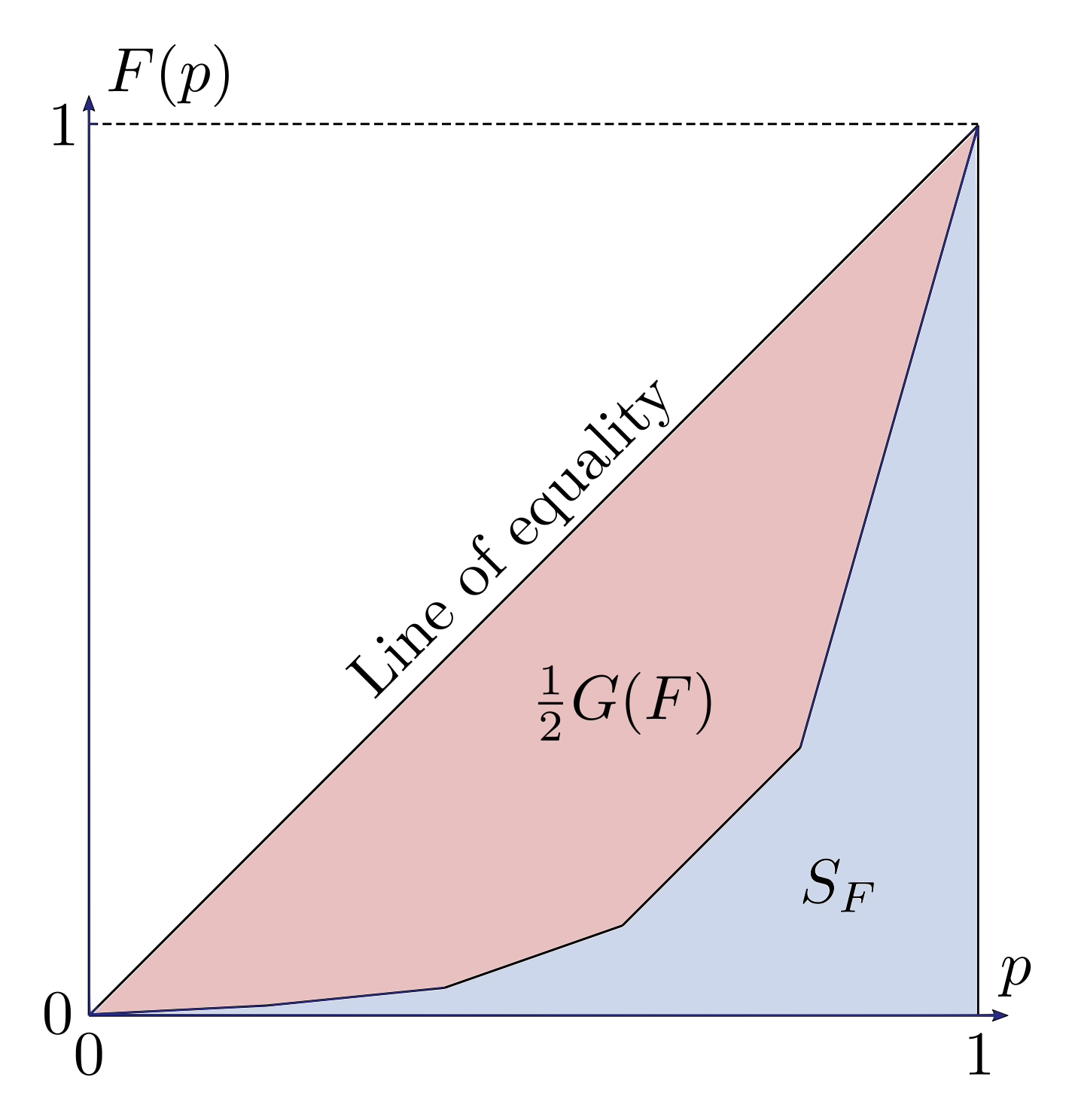

The third principle constrains how social and economic goods may be distributed by requiring that any inequalities be of maximal benefit to the least advantaged in a society; this is also described as the maximin principle. In this principle, Rawls does not take the simplistic view that inequality and fairness are mutually exclusive, but rather concisely articulates when the existence of inequality becomes unfair. In a sense, Rawls departs from utilitarian thinking (in which everyone’s benefit counts equally) by prioritising the least advantaged. We shall return to the maximin principle when we look at the use of inequality indices to measure algorithmic unfairness in a later chapter.
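As a loose illustration (not a formalisation of Rawls, whose principles concern the basic structure of society rather than model selection), the sketch below compares a utilitarian choice with a maximin choice among three hypothetical decision policies. The per-person benefits and the 90%/10% population split are invented numbers. The utilitarian rule picks the policy with the highest average benefit, even though it leaves the minority group with nothing; the maximin rule picks the policy that is best for the worst-off group.

```python
# Hypothetical per-person benefit of three candidate decision policies for two groups.
population_share = {"majority": 0.9, "minority": 0.1}
policies = {
    "A": {"majority": 10, "minority": 0},
    "B": {"majority": 8, "minority": 6},
    "C": {"majority": 5, "minority": 5},
}

def total_benefit(policy):
    """Utilitarian score: average benefit per person across the whole population."""
    return sum(population_share[g] * b for g, b in policies[policy].items())

def worst_off_benefit(policy):
    """Maximin score: benefit to the least-advantaged group."""
    return min(policies[policy].values())

utilitarian_choice = max(policies, key=total_benefit)
maximin_choice = max(policies, key=worst_off_benefit)
print(utilitarian_choice, maximin_choice)  # A B
```

Note that the unequal policy B is chosen over the perfectly equal policy C: maximin does not demand equality, only that the least advantaged fare as well as possible.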

1.3 A Legal Perspective

It’s important to remember that anti-discrimination laws are the result of long-standing and systemic discrimination against oppressed people. Their existence is a product of history; subjugation, genocide, civil war, mass displacement of entire communities, racial hierarchies and segregation, supremacist policies (exclusive access to publicly funded initiatives), voter suppression and more. The law provides an important historical record of what we as a society deem fair and unfair, but without history there is no context. The law does not define the benchmark for fairness. Laws vary by jurisdiction and change over time and in particular they often do not adequately recognise or address issues related to discrimination that are known and accepted by the sciences (social, mathematical, medical,...).

In this section we’ll look at the history, practical application and interpretation of the law in the US (acknowledging the narrow scope of our discussion). Finally, we’ll take a brief look at what might be on the legislative horizon for predictive algorithms, based on more recent global developments.

1.3.1 A Brief History of Anti-discrimination Law in the US

Anti-discrimination laws in the US rest on the 14th Amendment to the Constitution, which grants citizens equal protection of the laws. The class action lawsuit Brown v. Board of Education of Topeka, Kansas was a landmark case in the legal dismantling of racial segregation in the US. In 1954, justices ruled unanimously that racial segregation of children in public schools was unconstitutional, establishing the precedent that “separate-but-equal” was, in fact, not equal at all. Though Brown v. Board did not end segregation in practice, resistance to it in the South fuelled the civil rights movement. In the years that followed, the NAACP (National Association for the Advancement of Colored People) challenged segregation laws. In 1955, Rosa Parks’ refusal to give up her seat on a bus in Montgomery (Alabama) led to sit-ins and boycotts, many of them led by Martin Luther King Jr. The resulting Civil Rights Act of 1964 eventually brought an end to “Jim Crow” laws, which barred Blacks from sharing buses, schools and other public facilities with Whites.

After the violent attack by Alabama state troopers on participants of a peaceful march from Selma to Montgomery was televised, the Voting Rights Act of 1965 was passed. It overcame many barriers (including literacy tests) used at state and local level to prevent Black people from voting. Before this, incidents of voting officials in the South asking Black voters to “recite the entire Constitution or explain the most complex provisions of state laws” [3] were commonplace.

[3] P. L. B. Johnson, “Speech to a joint session of Congress on March 15, 1965,” Public Papers of the Presidents of the United States, vol. I, entry 107, pp. 281–287, 1965.

In the years following the Second World War, there were many attempts to pass an Equal Pay Act. Initial efforts were led by unions, who feared that men’s salaries would be undercut by women, who had been paid less for doing men’s jobs during the war. By 1960, women made up 37% of the workforce but earned on average 59 cents for each dollar earned by men. The Equal Pay Act was eventually passed in 1963 in a bill which endorsed “equal pay for equal work”. Laws for gender equality were strengthened the following year by the Civil Rights Act of 1964.

Throughout the 1800s the American federal government displaced Native American communities to facilitate White settlement. In 1830 the Indian Removal Act was passed in order to relocate hundreds of thousands of Native Americans. Over the following two decades, thousands of those forced to march hundreds of miles west on the perilous “Trail of Tears” died. By the middle of the century, the term “manifest destiny” had been popularised to describe the belief that White settlement in North America was ordained by God. In 1887, the Dawes Act laid the groundwork for the seizure and redistribution of reservation lands from Native to White Americans. Between 1945 and 1968 the federal government terminated recognition of more than 100 tribal nations, placing them under state jurisdiction. Once again Native Americans were relocated, this time from reservations to urban centres.

In addition to displacing people of colour, the federal government also enacted policies that reduced barriers to home ownership almost exclusively for White citizens: subsidizing the development of prosperous “White Caucasian” tenant/owner-only suburbs, guaranteeing mortgages, and enabling access to job opportunities by building highway systems for White commuters, often through communities of colour, simultaneously devaluing the properties in them. Even government initiatives aimed at helping veterans of World War II to obtain home loans accommodated Jim Crow laws, allowing the exclusion of Black people. During the Vietnam War, just days after the assassination of Martin Luther King Jr., the Fair Housing Act of 1968 was passed, prohibiting discrimination concerning the sale, rental and financing of housing based on race, religion, national origin or sex.

The Civil Rights Act of 1964 acted as a catalyst for many other civil rights movements, including those protecting people with disabilities. The Rehabilitation Act (1973) removed architectural, structural and transportation barriers and set up affirmative action programs. The Individuals with Disabilities Education Act (IDEA, 1975) required free, appropriate public education in the least restrictive environment possible for children with disabilities. The Air Carrier Access Act (1988) prohibited discrimination on the basis of disability in air travel and ensured equal access to air transportation services. The Fair Housing Amendments Act (1988) prohibited discrimination in housing against people with disabilities.

Title IX of the Education Amendments of 1972 prohibits federally funded educational institutions from discriminating against students or employees based on sex. The law ensured that schools (elementary to university level) that were recipients of federal funding (nearly all schools) provided fair and equal treatment of the sexes in all areas, including athletics. Before this, few opportunities existed for female athletes. The National Collegiate Athletic Association (NCAA) offered no athletic scholarships for women and held no championships for women’s teams. Since then the number of female college athletes has grown fivefold. The amendment is credited with decreasing dropout rates and increasing the numbers of women gaining college degrees.

The Equal Credit Opportunity Act was passed in 1974, when discrimination against women applying for credit in the US was rife. It was common practice for mortgage lenders to discount the incomes of women of ‘child-bearing’ age or simply to deny them credit. Two years later the law was amended to prohibit lending discrimination based on race, color, religion, national origin, age, the receipt of public assistance income, or exercising one’s rights under consumer protection laws.

In 1978, Congress passed the Pregnancy Discrimination Act in response to two Supreme Court cases that ruled that excluding pregnancy-related disabilities from disability benefit coverage was not gender-based discrimination, and did not violate the equal protection clause.

Table 1.1 shows a (far from exhaustive) summary of regulated domains with corresponding US legislation. Note that legislation in these domains extends to marketing and advertising, not just the final decision.

| Domain | Legislation |
|---|---|
| Finance | Equal Credit Opportunity Act |
| Education | Civil Rights Act (1964) |
| | Education Amendments (1972) |
| | IDEA (1975) |
| Employment | Equal Pay Act (1963) |
| | Civil Rights Act (1964) |
| Housing | Fair Housing Act (1968) |
| | Fair Housing Amendments Act (1988) |
| Transport | Urban Mass Transit Act (1970) |
| | Rehabilitation Act (1973) |
| | Air Carrier Access Act (1988) |
| Public accommodation<sup>a</sup> | Civil Rights Act (1964) |

<sup>a</sup> Prevents refusal of customers.

Table 1.2 provides a list of protected characteristics under US federal law with corresponding legislation (again not exhaustive).

| Protected Characteristic | Legislation |
|---|---|
| Race | Civil Rights Act (1964) |
| Sex | Equal Pay Act (1963) |
| | Civil Rights Act (1964) |
| | Pregnancy Discrimination Act (1978) |
| Religion | Civil Rights Act (1964) |
| National Origin | Civil Rights Act (1964) |
| Citizenship | Immigration Reform & Control Act |
| Age | Age Discrimination in Employment Act (1967) |
| Familial status | Civil Rights Act (1968) |
| Disability status | Rehabilitation Act of 1973 |
| | Americans with Disabilities Act of 1990 |
| Veteran status | Veterans’ Readjustment Assistance Act 1974 |
| | Uniformed Services Employment & Reemployment Rights Act |
| Genetic Information | Civil Rights Act (1964) |

1.3.2 Application and Interpretation of the Law

To get an idea of how anti-discrimination laws are applied in practice and how they might translate to algorithmic decision making, we look at Title VII of the Civil Rights Act of 1964 in the context of employment discrimination[4]. Legal liability for discrimination against protected classes can be established as disparate treatment and/or disparate impact. Disparate treatment (also described as direct discrimination in Europe) refers to both differing treatment of individuals based on protected characteristics, and intent to discriminate. Disparate impact (or indirect discrimination in Europe) does not consider intent but addresses policies and practices that disproportionately impact protected classes.

[4] S. Barocas and A. D. Selbst, “Big data’s disparate impact,” Calif Law Rev., vol. 104, pp. 671–732, 2016.

Disparate Treatment

Disparate treatment effectively prohibits rational prejudice (backed by data showing the protected feature to be correlated with the outcome) as well as denial of opportunities based on protected characteristics. For an algorithm, it effectively prevents the use of protected characteristics as inputs. It’s noteworthy that in the case of disparate treatment, the actual impact of using the protected features on the outcome is irrelevant; so even if a company could show that the output of their model had zero correlation with the protected characteristic, the company would still be liable for disparate treatment. This is somewhat bizarre given that not using the protected feature in the algorithm provides no guarantee that the algorithm is not biased in relation to it. Indeed, an organisation could very well use its data to predict the protected characteristic.
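To make the last point concrete, here is a minimal simulation in Python. Everything in it is hypothetical: a protected attribute `g` that the decision rule never sees, a proxy `z` (think of a neighbourhood indicator) that we assume agrees with `g` 90% of the time, and a made-up approval rule based on an independent `skill` score plus the proxy. It is a sketch of the mechanism, not a model of any real system.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
n = 100_000

# Hypothetical protected attribute g (never used by the decision rule) and a
# proxy z that agrees with g for 90% of people, e.g. a neighbourhood indicator.
g = rng.integers(0, 2, size=n)
z = np.where(rng.random(n) < 0.9, g, 1 - g)
skill = rng.normal(size=n)  # independent of g by construction

# A "blind" decision rule: it sees only skill and the proxy, never g.
approved = (skill + z) > 1.0

# The proxy alone recovers the protected attribute for about 90% of people...
proxy_accuracy = np.mean(z == g)

# ...and selection rates still differ sharply between the two groups.
rate_g1 = approved[g == 1].mean()
rate_g0 = approved[g == 0].mean()

print(f"proxy accuracy: {proxy_accuracy:.2f}")
print(f"selection rate g=1: {rate_g1:.2f}, g=0: {rate_g0:.2f}")
print(f"ratio of selection rates: {rate_g0 / rate_g1:.2f}")
```

Dropping `g` from the inputs leaves the disparity intact, because the rule can still reach `g` through `z`.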

In an effort to avoid disparate treatment liability, many organisations do not even collect data relating to protected characteristics, leaving them unable to accurately measure, let alone address, bias in their algorithms, even if they might want to. (In fact, I met a data scientist at a conference, working for a financial institution, who said her team was trying to predict sensitive features such as race and gender in order to measure bias in their algorithms.) In summary, disparate treatment as applied today does not resolve the problem of unconscious discrimination against disadvantaged classes through the use of machine learning algorithms. Further, it acts as a deterrent to ethically minded companies that might want to measure the biases in their algorithms.

Disparate treatment

Suppose a company predicts the sensitive feature and uses this as an input to its model. Should this be considered disparate treatment?

What about the case where the employer implements an algorithm, finds out that it has a disparate impact, and uses it anyway? Doesn’t that become disparate treatment? No, it doesn’t. In fact, somewhat surprisingly, deciding not to apply it upon noting the disparate impact could result in a disparate treatment claim in the opposite direction[5]. We’ll return to this later. Okay, so what about disparate impact?

[5] “Ricci v. DeStefano, 557 U.S. 557.” 2009.

Disparate Impact

To establish a violation, it is not enough to show that there is a disparate impact; it must also be shown either that there is no business justification for it or, if there is, that the employer refuses to use another, less discriminatory, means of achieving the desired result. So how much of an impact is enough to warrant a disparate impact claim? There are no rules here, only guidelines. The Uniform Guidelines on Employee Selection Procedures from the Equal Employment Opportunity Commission (EEOC) state that if the selection rate for one protected group is less than four fifths of that for another, it will generally be regarded as evidence of adverse impact, though they also state that the threshold would depend on the circumstances.
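The four-fifths guideline is simple arithmetic on selection rates. The sketch below, with hypothetical group names and counts, compares each group’s selection rate with the highest group’s rate, which is how the Uniform Guidelines phrase the comparison; the function names are our own, and the 0.8 threshold is only the default rule of thumb.

```python
def selection_rate(selected: int, applicants: int) -> float:
    """Fraction of a group's applicants who were selected."""
    return selected / applicants


def four_fifths_check(rates: dict[str, float], threshold: float = 0.8) -> dict[str, bool]:
    """Flag each group whose selection rate is below `threshold` times the
    highest group's rate (the EEOC rule of thumb for adverse impact)."""
    best = max(rates.values())
    return {group: rate / best < threshold for group, rate in rates.items()}


# Hypothetical hiring figures, for illustration only.
rates = {
    "group_a": selection_rate(48, 80),  # 0.60
    "group_b": selection_rate(12, 40),  # 0.30
}
flags = four_fifths_check(rates)
print(flags)  # {'group_a': False, 'group_b': True}
```

Here group_b’s rate is half of group_a’s, well under four fifths, so it would generally be regarded as evidence of adverse impact.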

Assuming the disparate impact is demonstrated, the issue becomes proving business justification. The requirement for business justification has softened in favour of the employer over the years: it was treated as “business necessity”[6] early on and later interpreted as “business justification”[7]. Today, it’s generally accepted that business justification lies somewhere between the extremes of “job-relatedness” and “business necessity”. As a concrete example of disparate impact, taking the extreme of job-relatedness, the EEOC along with several federal courts have determined that discrimination solely on the basis of a criminal record is a violation under disparate impact unless the particular conviction is related to the role, because non-White applicants are more likely to have a criminal conviction.

[6] “Griggs v. Duke Power Co., 401 U.S. 424.” 1971.

[7] “Wards Cove Packing Co. v. Atonio, 490 U.S. 642.” 1989.

For a machine learning algorithm, business justification boils down to the question of job-relatedness of the target variable. If the target variable is improperly chosen, a disparate impact violation can be established. In practice, however, the courts will accept most plausible explanations of job-relatedness, since rejecting one would set a precedent that the practice itself is discriminatory. Assuming the target variable is accepted as job-related, there is then no requirement to validate the model’s ability to predict said trait, only a guideline which sets a low bar (a statistical significance test showing that the target variable correlates with the trait) and which the court is free to ignore.
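To see why a significance test is a low bar, consider how little correlation is needed to pass one when the sample is large. In the hypothetical simulation below (a sketch using `scipy.stats.pearsonr`; the variables and the true correlation of about 0.05 are invented for illustration), the target variable explains well under 1% of the variance in the trait, yet the test is comfortably significant.

```python
import numpy as np
from scipy.stats import pearsonr

rng = np.random.default_rng(seed=0)
n = 20_000

# Hypothetical data: a job-related trait and a model target that is only
# very weakly related to it (true correlation of about 0.05).
trait = rng.normal(size=n)
target = 0.05 * trait + rng.normal(size=n)

r, p_value = pearsonr(trait, target)
print(f"correlation r = {r:.3f}, p-value = {p_value:.1e}")
# A tiny correlation is still "statistically significant" with this many samples.
print("significant at the 5% level:", p_value < 0.05)
```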

Assuming business justification is proven by the employer, the final burden then falls on the plaintiff to show that the employer refused to use a less discriminatory “alternative employment practice”. If the less discriminatory alternative would incur additional cost (as is likely), would declining it be considered refusing? Likely not.

While on the surface disparate impact might seem like a solution, the current framework offers little recourse: the business justification requirement is weak (a plausible target variable will do), there is no requirement to validate the model, and the plaintiff must show that the employer refused a less discriminatory alternative employment practice. Clearly there is need for reform.

Anti-classification versus Anti-subordination

Just as the meaning of fairness is subjective, so is the interpretation of anti-discrimination laws. At one extreme, anti-classification holds the weaker interpretation: that the law is intended to prevent classification of people based on protected characteristics. At the other extreme, anti-subordination defines the stronger stance: that anti-discrimination laws exist to prevent social hierarchies, class or caste systems based on protected features, and that they should actively work to eliminate them where they exist. An important ideological difference between the two schools of thought is in the application of positive discrimination policies. Under anti-subordination principles, one might advocate for affirmative action as a means to bridge gaps in access to employment, housing, education and other such pursuits that are a direct result of historical systemic discrimination against particular groups. A strict interpretation of the anti-classification principle would prohibit such actions. Both anti-classification and anti-subordination ideologies have been argued and upheld in landmark cases.

In 2003, the Supreme Court held that a student admissions process that favours “under-represented minority groups” does not violate the Fourteenth Amendment[8], provided it evaluates applicants holistically at an individual level. The same year, the New Haven Fire Department administered a two-part test in order to fill 15 openings. Examinations were governed in part by the City of New Haven. Under the city charter, civil service positions must be filled by one of the top three scoring individuals. 118 (White, Black and Hispanic) firefighters took the exams. Of the resulting 19 candidates who scored highest on the tests and could be considered for the positions, none were Black. After heated public debate and under threat of legal action either way, the city threw out the test results. This action was later determined to be a disparate treatment violation: in 2009, the Supreme Court ruled that disparate treatment could not be used to avoid disparate impact without sufficient evidence of liability for the latter[5]. This landmark case was the first example of conflict between the two doctrines of disparate impact and disparate treatment and, by extension, between anti-subordination and anti-classification.

[8] “Grutter v. Bollinger, 539 U.S. 306.” 2003.

Disparate treatment seems to align well with anti-classification principles, seeking to prevent intentional discrimination based on protected characteristics. In the case of disparate impact, things are less clear. Is it a secondary ‘line of defence’ designed to weed out well-masked intentional discrimination? Or is its intention to address inequity that exists as a direct result of historical injustice? One can draw parallels here with the ‘business necessity’ versus ‘business justification’ requirements discussed earlier.

1.3.3 Future Legislation

In May 2018, the European Union (EU) brought into force the General Data Protection Regulation (GDPR), a legal framework around the protection of personal data of EU citizens. The regulation comprises binding articles and non-binding recitals. It sets provisions for processing of data in relation to decision making, described as ‘profiling’ under recital 71[9]. Though currently non-binding, the recital provides an indication of what’s to come. It talks specifically about having the right not to be subject to decisions based solely on automated processing, and mentions credit applications, e-recruiting and any system which analyses or predicts aspects of a person’s performance at work, economic situation, health, personal preferences or interests, reliability or behaviour, location or movements. The recital also talks about requirements around using “appropriate mathematical or statistical procedures” to prevent “discriminatory effects on natural persons on the basis of racial or ethnic origin, political opinion, religion or beliefs, trade union membership, genetic or health status or sexual orientation”. More recently, in 2021, the EU proposed taking a risk-based approach to the question of which technologies should be regulated, dividing them into four categories: unacceptable risk, high risk, limited risk and minimal risk[10]. Things may change as the proposed law is debated but, once agreed, it’s not unlikely that it will serve as a prototype for legislation in the US (and other countries around the world), as GDPR did.

[9] “General Data Protection Regulation (GDPR): (EU) 2016/679 Recital 71.” 2016.

[10] “Europe fit for the Digital Age: Commission proposes new rules and actions for excellence and trust in Artificial Intelligence.” 2021.

In April 2019, the Algorithmic Accountability Act was proposed to the US Senate. The bill requires specified commercial entities to conduct impact assessments of automated decision systems and specifically states that assessments must include evaluations and risk assessment in relation to “accuracy, fairness, bias, discrimination, privacy, and security” not just for the model output but for the training data. The bill has cosponsors in 22 states and has been referred to the Committee on Commerce, Science, and Transportation for review. These examples are clear indications that the issues of fairness and bias in automated decision making systems are on the radar of regulators.

1.4 A Technical Perspective

The problem of distinguishing correlation from causation is an important one in identifying bias. Using illustrative examples of Simpson’s paradox, we demonstrate the danger of assuming causal relationships based on observational data.

1.4.1 Simpson’s Paradox

In 1973, the University of California, Berkeley received approximately 15,000 applications for the fall quarter[11]. At the time it was made up of 101 departments. 12,763 applications reached the decision stage. Of these, 8442 were from male applicants and 4321 from female applicants. The acceptance rates for the applicants were 44% and 35% respectively (see Table 1.3).

[11] P. J. Bickel, E. A. Hammel, and J. W. O’Connell, “Sex bias in graduate admissions: Data from berkeley,” Science, vol. 187, Issue 4175, pp. 398–404, 1975.

| Gender | Admitted | Rejected | Total | Acceptance Rate |
|---|---|---|---|---|
| Male | 3738 | 4704 | 8442 | 44.3% |
| Female | 1494 | 2827 | 4321 | 34.6% |
| Aggregate | 5232 | 7531 | 12763 | 41.0% |

With a difference of almost 10 percentage points in acceptance rates, it seems a likely case of discrimination against women. Indeed, a \(\chi^2\) hypothesis test for independence between the variables (gender and application acceptance) reveals that the probability of observing such a result or worse, assuming they are independent, is \(6\times10^{-26}\). This is a strong indication that they are not independent and therefore evidence of bias in favour of male applicants. Since admissions are determined by the individual departments, it’s worth trying to understand which departments might be responsible. We focus on the data for the six largest departments, shown in Table 1.4. Here again we see a similar pattern. There appears to be bias in favour of male applicants, and a \(\chi^2\) test shows that the probability of seeing this result under the assumption of independence is \(1\times10^{-21}\). It looks like we have quickly narrowed down our search.

| Gender | Admitted | Rejected | Total | Acceptance Rate |
|---|---|---|---|---|
| Male | 1198 | 1493 | 2691 | 44.5% |
| Female | 557 | 1278 | 1835 | 30.4% |
| Aggregate | 1755 | 2771 | 4526 | 38.8% |
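The two \(\chi^2\) tests quoted above can be reproduced from the counts in Tables 1.3 and 1.4. A minimal sketch using `scipy.stats.chi2_contingency`; we are assuming the original analysis used the function’s defaults, which apply Yates’ continuity correction to 2×2 tables, so the p-values printed should be close to, though not guaranteed to match, the quoted figures.

```python
from scipy.stats import chi2_contingency

# Rows: male, female applicants; columns: admitted, rejected.
tables = {
    "Table 1.3": [[3738, 4704], [1494, 2827]],
    "Table 1.4": [[1198, 1493], [557, 1278]],
}

results = {}
for name, table in tables.items():
    # With one degree of freedom, scipy applies Yates' continuity correction
    # by default (correction=True).
    chi2, p_value, dof, _expected = chi2_contingency(table)
    results[name] = (chi2, p_value, dof)
    print(f"{name}: chi2 = {chi2:.1f}, dof = {dof}, p = {p_value:.0e}")
```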

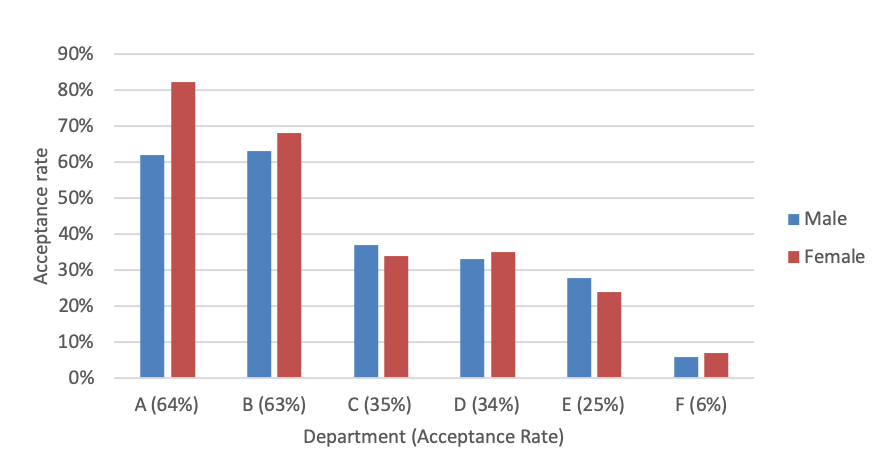

Figure 1.1 shows the acceptance rates for each department by gender, in decreasing order of acceptance rates. Performing \(\chi^2\) tests for each department reveals that the only department with strong evidence of bias is A, and there the bias is in favour of female applicants. The probability of observing the data for department A, under the assumption of independence, is \(5\times10^{-5}\).
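The department-level tests can be sketched the same way. The counts below are the widely used six-department subset of the 1973 Berkeley data (the same figures as R’s `UCBAdmissions` dataset); per gender they sum to the totals in Table 1.4. As before, this assumes `scipy`’s default continuity correction.

```python
from scipy.stats import chi2_contingency

# (admitted, rejected) for men and for women in each of the six largest
# departments; per gender, the counts sum to the totals in Table 1.4.
departments = {
    "A": ((512, 313), (89, 19)),
    "B": ((353, 207), (17, 8)),
    "C": ((120, 205), (202, 391)),
    "D": ((138, 279), (131, 244)),
    "E": ((53, 138), (94, 299)),
    "F": ((22, 351), (24, 317)),
}

significant = []
for dept, (men, women) in departments.items():
    _, p_value, _, _ = chi2_contingency([men, women])
    male_rate = men[0] / sum(men)
    female_rate = women[0] / sum(women)
    print(f"{dept}: men {male_rate:.0%}, women {female_rate:.0%}, p = {p_value:.1g}")
    if p_value < 0.05:
        significant.append(dept)

print("departments with p < 0.05:", significant)
```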

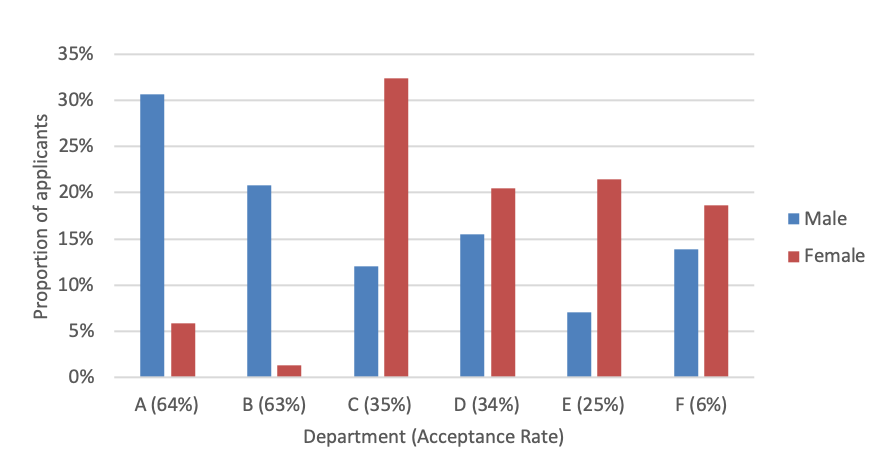

So what’s going on? Figure 1.2 shows the application distributions for male and female applicants for each of the six departments. From the plots we are able to see a pattern: female applicants more often apply to departments with a lower acceptance rate.

In other words, a larger proportion of the women are being filtered out overall, simply because they are applying to departments that are harder to get into.
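One way to check this explanation, sketched below with the same department-level counts, is to ask what acceptance rate each gender would have seen if every department admitted applicants at its own overall, gender-blind rate. This is a simple standardisation introduced here for illustration; the helper names are our own.

```python
# (admitted, total applications) per department for each gender.
men = {"A": (512, 825), "B": (353, 560), "C": (120, 325),
       "D": (138, 417), "E": (53, 191), "F": (22, 373)}
women = {"A": (89, 108), "B": (17, 25), "C": (202, 593),
         "D": (131, 375), "E": (94, 393), "F": (24, 341)}

# Overall (gender-blind) acceptance rate of each department.
dept_rate = {d: (men[d][0] + women[d][0]) / (men[d][1] + women[d][1]) for d in men}


def expected_acceptance(applicants):
    """Acceptance rate a group would get if each department admitted
    everyone at its own overall rate, regardless of gender."""
    total = sum(n for _, n in applicants.values())
    return sum(dept_rate[d] * n for d, (_, n) in applicants.items()) / total


def share_in(applicants, depts):
    """Fraction of a group's applications that go to the given departments."""
    total = sum(n for _, n in applicants.values())
    return sum(applicants[d][1] for d in depts) / total


print({d: round(r, 2) for d, r in dept_rate.items()})
print(f"share applying to A or B: men {share_in(men, 'AB'):.0%}, women {share_in(women, 'AB'):.0%}")
print(f"gender-blind expected acceptance: men {expected_acceptance(men):.1%}, "
      f"women {expected_acceptance(women):.1%}")
```

Under this gender-blind assumption the gap (roughly 45% versus 30%) is about as large as the observed gap in Table 1.4, which is consistent with the difference being driven by where people applied.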

This is a classic example of Simpson’s Paradox (also known as the reversal paradox and the Yule-Simpson effect). We have an observable relationship between two categorical variables (in this case gender and acceptance) which disappears or reverses after controlling for one or more other variables (in this case department). Simpson’s Paradox is a special case of so-called association paradoxes (where the variables are categorical, and the relationship changes qualitatively), but the same rules also apply to continuous variables. The marginal (unconditional) measure of association (e.g. correlation) between two variables need not be bounded by the partial (conditional) measures of association (after controlling for one or more variables). Although Edward Hugh Simpson famously wrote about the paradox in 1951, it was not discovered by him. In fact, it was reported by George Udny Yule as early as 1903. The association paradox for continuous variables was demonstrated by Karl Pearson in 1899.
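The continuous case is easy to reproduce. In the hypothetical simulation below, \(y\) decreases with \(x\) within each of two groups, yet the pooled correlation is strongly positive because the second group sits higher on both axes. The group sizes, slopes and offsets are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=2)

# Two hypothetical groups. Within each group y falls as x rises (slope -0.5),
# but the second group is shifted up and to the right.
x0 = rng.uniform(0, 10, size=200)
y0 = -0.5 * x0 + rng.normal(size=200)
x1 = rng.uniform(10, 20, size=200)
y1 = 20 - 0.5 * x1 + rng.normal(size=200)

x = np.concatenate([x0, x1])
y = np.concatenate([y0, y1])

r_within_0 = np.corrcoef(x0, y0)[0, 1]
r_within_1 = np.corrcoef(x1, y1)[0, 1]
r_pooled = np.corrcoef(x, y)[0, 1]
print(f"within-group r: {r_within_0:.2f}, {r_within_1:.2f}; pooled r: {r_pooled:.2f}")
```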

Let’s discuss another quick example. A 1996 follow-up study on the effects of smoking recorded the mortality rate for the participants over a 20-year period. It found higher mortality rates among the non-smokers, 31.4% compared to 23.9%, which, in itself, might imply a considerable protective effect from smoking. Clearly there’s something fishy going on. Disaggregating the data by age group showed that the mortality rates were higher for smokers in all but one of them. Looking at the age distributions of the smokers and non-smokers, it’s apparent that the non-smoking group is skewed towards older ages, and so they are older on average. This concords with the rationale that non-smokers live longer, hence the difference in age distributions of the participants.

1.4.2 Causality

In both the above examples, it appears that the salient information is found in the disaggregated data (we’ll come back to this later). In both cases it is the disaggregated data that enables us to understand the true nature of the relationship between the variables of interest. As we shall see in this section, this need not be the case. To show this, we discuss two examples. In each case, the data is identical but the variables are not. The examples are those Simpson gave in his original 1951 paper[12].

[12] E. Simpson, “The interpretation of interaction in contingency tables,” Journal of the Royal Statistical Society, vol. Series B, 13, pp. 238–241, 1951.

Suppose we have three binary variables, \(A\), \(B\) and \(C\), and we are interested in understanding the relationship between \(A\) and \(B\) given a set of 52 data points. A summary of the data showing the association between variables \(A\) and \(B\) is shown in Table 1.5, first for all the data points and then stratified (separated) by the value of \(C\) (note the first table is the sum of the latter two). The first table indicates that \(A\) and \(B\) are unconditionally independent (since changing the value of \(A\) does not change the distribution of \(B\)). The next two tables suggest \(A\) and \(B\) are conditionally dependent given \(C\).

Variables: \(A\) = Plain? / Treated?; \(B\) = Black? / Died?; \(C\) = Stained? / Male?

All data points:

| | \(A=1\) | \(A=0\) |
|---|---|---|
| \(B=1\) | 20 | 6 |
| \(B=0\) | 20 | 6 |
| \(\mathbb{P}(B=1\mid A)\) | 50% | 50% |

\(C=1\):

| | \(A=1\) | \(A=0\) |
|---|---|---|
| \(B=1\) | 5 | 3 |
| \(B=0\) | 8 | 4 |
| \(\mathbb{P}(B=1\mid A,C=1)\) | 38% | 43% |

\(C=0\):

| | \(A=1\) | \(A=0\) |
|---|---|---|
| \(B=1\) | 15 | 3 |
| \(B=0\) | 12 | 2 |
| \(\mathbb{P}(B=1\mid A,C=0)\) | 56% | 60% |

Each cell of the table shows the number of examples in the dataset satisfying the conditions given in the corresponding row and column headers.
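The conditional probabilities in Table 1.5 can be recomputed directly from the counts. A small sketch, using exact fractions so the marginal independence is visible without rounding; the dictionary layout and function name are our own.

```python
from fractions import Fraction

# counts[(c, a, b)] = number of data points with C=c, A=a, B=b (Table 1.5).
counts = {
    (1, 1, 1): 5, (1, 0, 1): 3, (1, 1, 0): 8, (1, 0, 0): 4,
    (0, 1, 1): 15, (0, 0, 1): 3, (0, 1, 0): 12, (0, 0, 0): 2,
}


def p_b_given(a, c=None):
    """P(B=1 | A=a), or P(B=1 | A=a, C=c) when c is given."""
    cs = [0, 1] if c is None else [c]
    b1 = sum(counts[(ci, a, 1)] for ci in cs)
    total = sum(counts[(ci, a, b)] for ci in cs for b in (0, 1))
    return Fraction(b1, total)


print("marginal:", p_b_given(1), p_b_given(0))  # 1/2 1/2
print("C=1:", float(p_b_given(1, 1)), float(p_b_given(0, 1)))
print("C=0:", float(p_b_given(1, 0)), float(p_b_given(0, 0)))
```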

Question:

Which distribution gives us the most relevant understanding of the association between \(A\) and \(B\): the marginal (i.e. unconditional) distribution \(\mathbb{P}(A,B)\) or the conditional distribution \(\mathbb{P}(A,B|C)\)? To show that causal relationships matter, we consider two different examples.

Example a) Pack of Cards (Colliding Variable)

Suppose the population is a pack of cards. It so happens that baby Milen has been messing about with the cards and made some of them dirty in the process. Let’s summarise our variables:

\(A\) tells us the character of the card, either plain (\(A=1\)) or royal (King, Queen, Jack; \(A=0\)).

\(B\) tells us the colour of the card, either black (\(B=1\)) or red (\(B=0\)).

\(C\) tells us if the card is dirty (\(C=1\)) or clean (\(C=0\)).

In this case, the aggregated data showing \(\mathbb{P}(A,B)\) is relevant since the cleanliness of the cards \(C\) has no bearing on the association between the character \(A\) and colour \(B\) of the cards.

Example b) Treatment Effect on Mortality Rate (Confounding Variable)

Next, suppose that the data relates to the results of medical trials for a drug on a potentially lethal illness. This time,

\(A\) tells us if the subject was treated (\(A=1\)) or not (\(A=0\)).

\(B\) tells us if the subject died (\(B=1\)) or recovered (\(B=0\)).

\(C\) tells us the gender of the subject, either male (\(C=1\)) or female (\(C=0\)).

In this case the disaggregated data shows the more relevant association, \(\mathbb{P}(A,B|C)\). From it, we can see that female patients are more likely to die than male patients, whether treated or not (56% and 60% versus 38% and 43%). For both male and female participants, treatment with the drug (\(A=1\)) reduces the mortality rate, and this effect is obscured by aggregating the data over gender \(C\).

Back to Causality

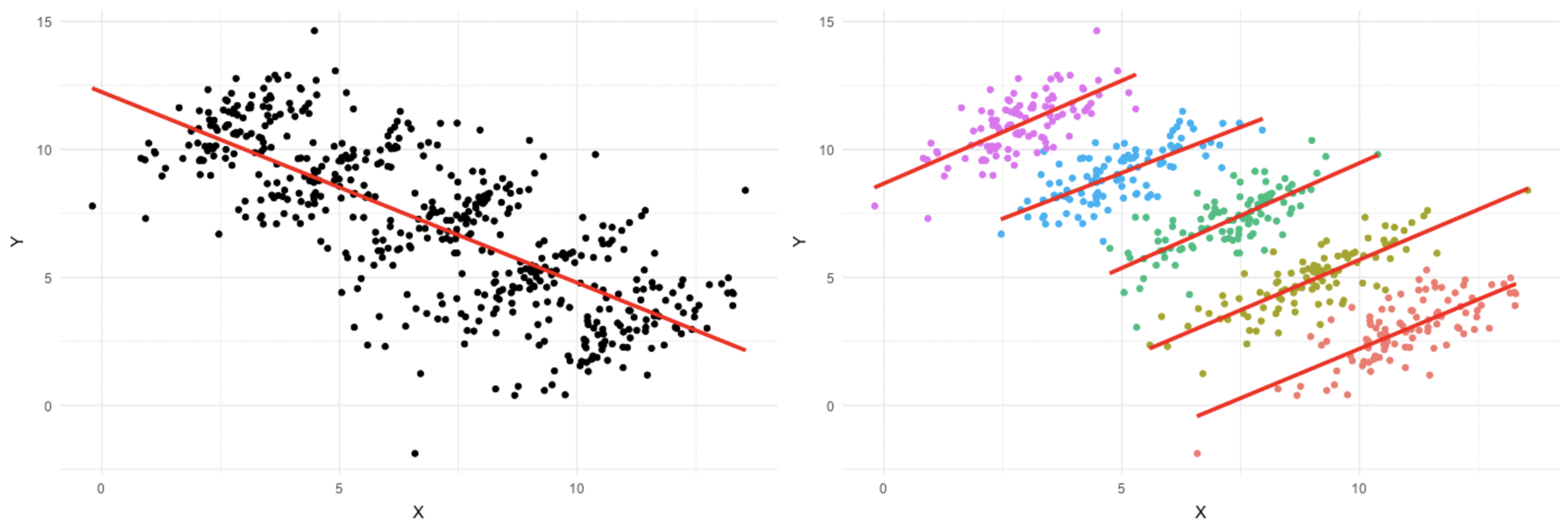

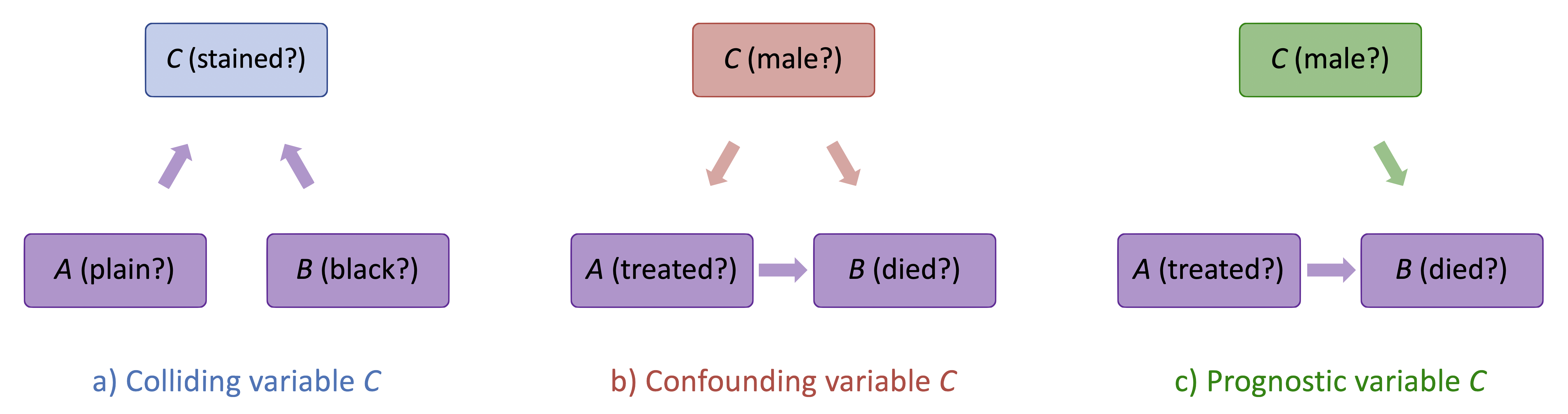

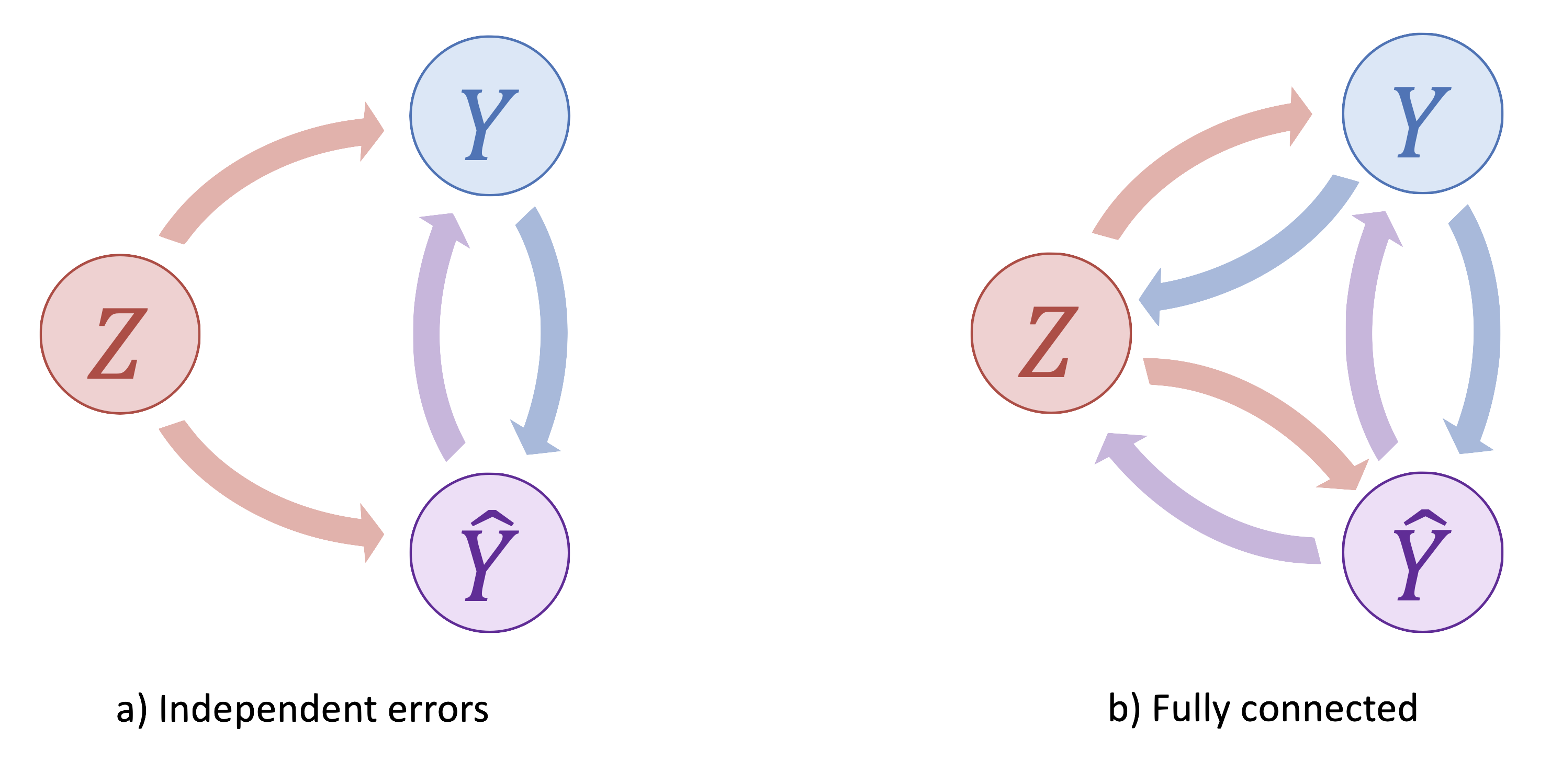

The key difference between these examples is the causal relationship between the variables rather than the statistical structure of the data. In the first example with the playing cards, the variable \(C\) is a colliding variable; in the second example looking at patient mortality, it is a confounding variable. Figure 1.4 a) and b) show the causal relationships between the variables in the two cases.

The causal diagram in Figure 1.4 a) shows the variables \(A\), \(B\) and \(C\) for the first example. The arrows run from both card character and colour to cleanliness because, apparently, baby Milen had a preference for royal cards over plain and red cards over black. Conditioning on a collider \(C\) generates an association (e.g. correlation) between \(A\) and \(B\), even if they are unconditionally independent. Conditioning on a common effect in this way is often observed in practice as selection or representation bias. Representation bias can induce correlation between variables, even where there is none. For decision systems, this can lead to feedback loops that increase the extremity of the representation bias in future data. We’ll come back to this in chapter 2, when we talk about common causes of bias.
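Collider bias can be reproduced by simulation. In the sketch below, card character \(A\) and colour \(B\) are generated independently, and the probability of a card being dirty (\(C=1\)) depends on both. The dirtiness probabilities are hypothetical, chosen only to reflect Milen’s stated preferences; they are not derived from Table 1.5.

```python
import numpy as np

rng = np.random.default_rng(seed=3)
n = 200_000

# A (plain card) and B (black card) are generated independently.
a = rng.random(n) < 40 / 52
b = rng.random(n) < 0.5

# Hypothetical dirtiness probabilities: Milen prefers royal (A=0) and red (B=0).
p_dirty = np.select(
    [~a & ~b, ~a & b, a & ~b, a & b],
    [0.8, 0.5, 0.5, 0.2],
)
c = rng.random(n) < p_dirty


def p_black(mask):
    """Estimated P(B=1) within the subset selected by a boolean mask."""
    return b[mask].mean()


marginal_gap = p_black(a) - p_black(~a)       # P(B=1|A=1) - P(B=1|A=0)
dirty_gap = p_black(a & c) - p_black(~a & c)  # same, among dirty cards only
print(f"gap in P(black), all cards:   {marginal_gap:+.3f}")
print(f"gap in P(black), dirty cards: {dirty_gap:+.3f}")
```

Among all cards the gap is essentially zero; among dirty cards alone, plain cards are noticeably less likely to be black, an association created purely by conditioning on \(C\).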

The causal diagram in Figure 1.4 b) shows the variables \(A\), \(B\) and \(C\) for the second example. The arrows run from gender to treatment because men were less likely to be treated, and from gender to death because men were also less likely to die. The arrow from \(A\) to \(B\) represents the effect of treatment on mortality, which is observable only by conditioning on gender. Note that there are two sources of association, in opposite directions, between variables \(A\) and \(B\) (treatment and death): a positive association through gender (treated patients are disproportionately female, and female patients are more likely to die) and a negative association from the treatment itself, which lowers mortality. The two effects cancel each other out when the data is aggregated.
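One standard way to recover the treatment effect under the diagram in Figure 1.4 b) is to average the gender-specific mortality rates using each gender’s share of the whole study population (often called standardisation, or adjustment for a confounder). We have not introduced this technique formally; the sketch below simply applies it to the counts in Table 1.5, assuming gender is the only confounder.

```python
from fractions import Fraction

# Counts from Table 1.5, read as C = male, A = treated, B = died.
# deaths[(c, a)] = (number who died, number of patients)
deaths = {
    (1, 1): (5, 13), (1, 0): (3, 7),    # men: treated, untreated
    (0, 1): (15, 27), (0, 0): (3, 5),   # women: treated, untreated
}

# Share of each gender in the whole study population (20 men, 32 women).
n_by_gender = {c: deaths[(c, 1)][1] + deaths[(c, 0)][1] for c in (0, 1)}
n_total = sum(n_by_gender.values())
p_c = {c: Fraction(n, n_total) for c, n in n_by_gender.items()}


def adjusted_mortality(a):
    """Gender-specific mortality for treatment level a, averaged using each
    gender's share of the whole study population."""
    return sum(Fraction(*deaths[(c, a)]) * p_c[c] for c in (0, 1))


print(f"treated:   {float(adjusted_mortality(1)):.3f}")
print(f"untreated: {float(adjusted_mortality(0)):.3f}")
```

With gender held at its study-wide mix, treatment lowers mortality (roughly 49% versus 53%), whereas the crude rates in Table 1.5 are 50% for both groups.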

We see through the discussion of these two examples that statistical reasoning alone is not sufficient to determine which of the distributions (marginal or conditional) is relevant. Note that the above conclusions in relation to colliding and confounding variables do not generalize to complex time-varying problems.

Before moving on from causality, we return to the example we discussed at the very start of this section. From our analysis of the Berkeley admissions data, we concluded that the disaggregated data contained the salient information explaining the disparity in acceptance rates for male and female applicants. The problem is, we have only shown application rates to be one of many possible causes of the differing acceptance rates (we cannot see outside of our data). Nor have we shown that gender discrimination is not a cause. What we have evidenced is the existence of disparities in both acceptance rates and application rates across sex. One problem is that gender discrimination is not a measurable thing in itself. It’s complicated. It is made up of many components, most of which are not contained in the data: beliefs, personal preferences, behaviours, actions, and more. A valid question we cannot answer is: why do the application rates differ by sex? How do we know that this is not itself a result of gender discrimination? Perhaps some departments are less welcoming of women than others or, perhaps, some are just much more welcoming of men than women? So how would we know if gender discrimination is at play here? We need to ask the right questions to collect the right data.

1.4.3 Collapsibility

We have demonstrated that correlation does not imply causation in the manifestation of Simpson’s Paradox. But there is a second factor that can have an impact: the nature of the measure of association in question.

Example c) Treatment Effect on Mortality Rate (Prognostic Variable)

Suppose that in the study of the efficacy of the treatment (Example b above), we remedy the selection bias so that male and female patients are equally likely to be treated. This removes the causal relationship between variables \(A\) and \(C\) (treatment and gender), and the variable \(C\) becomes prognostic rather than confounding (see Figure 1.4 c)). In this case the decision as to which distributions (marginal or conditional) are most relevant would depend only on the target population in question. In the absence of the confounding variable in our study, one might reasonably expect the marginal measure of association to be bounded by the partial measures of association. Such intuition is correct only if the measure of association is collapsible (that is, it can be expressed as a weighted average of the partial measures), not otherwise. Some examples of collapsible measures of association are the risk ratio and risk difference. The odds ratio, however, is not collapsible. If you don’t know what these are, don’t worry; we’ll return to them in chapter 3.
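A small numerical sketch shows the non-collapsibility of the odds ratio. The risks below are hypothetical: two equally sized strata of \(C\), with treatment assigned with equal probability in each (so \(C\) is prognostic, not confounding). Both strata have an odds ratio of 4, yet the marginal odds ratio is smaller than either, while the risk difference and risk ratio behave as collapsible measures should.

```python
def odds(p):
    """Odds corresponding to a probability p."""
    return p / (1 - p)


# Hypothetical mortality risks (treated, untreated) in two equally sized
# strata of C, with treatment assigned independently of C.
strata = {"C=1": (0.8, 0.5), "C=0": (0.5, 0.2)}

for name, (p1, p0) in strata.items():
    print(f"{name}: RD = {p1 - p0:.2f}, RR = {p1 / p0:.2f}, OR = {odds(p1) / odds(p0):.2f}")

# Equal stratum sizes and equal treatment probability in each stratum mean the
# marginal risks are simple averages of the stratum risks.
p1 = sum(p for p, _ in strata.values()) / 2
p0 = sum(p for _, p in strata.values()) / 2
print(f"marginal: RD = {p1 - p0:.2f}, RR = {p1 / p0:.2f}, OR = {odds(p1) / odds(p0):.2f}")
```

The marginal risk ratio (about 1.86) lies between the stratum values of 1.6 and 2.5, and the risk difference is 0.3 everywhere, but the marginal odds ratio (about 3.45) falls outside the range spanned by the stratum odds ratios.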

1.5 What’s the Harm?

In this section we discuss the recent and broader societal concerns related to machine learning technologies.

1.5.1 The Illusion of Objectivity

One of the most concerning things about the machine learning revolution is the perception that these algorithms are somehow objective (unlike humans), and are therefore a better substitute for human judgement. This viewpoint is not just a belief of laymen but an idea that is also projected from within the machine learning community. There are often financial incentives to exaggerate the efficacy of such systems.

Automation Bias

Automation bias, the tendency for people to favour decisions made by automated systems despite contradictory information from non-automated sources, is a growing problem as we integrate more and more machines into our decision-making processes, especially in infrastructure: healthcare, transportation, communication, power plants and more.

It is important to be clear that, in general, machine learning systems are not objective. Data is produced by a necessarily subjective set of decisions (whom and how to sample, how to group events or characteristics, which features to collect). Modelling also involves making choices about how to process the data, what class of model to use and, perhaps most importantly, how success is measured. Finally, even if our model is well calibrated to the data, that says nothing about how its errors are distributed across the population. The consistency of algorithms in decision making, compared to humans (who make decisions individually, on a case-by-case basis), is often described as a benefit, but it is their very consistency that makes them dangerous: they are capable of discriminating systematically and at scale. One must not confuse consistency with objectivity. For algorithms, consistency also means consistently making the same errors.

Example: COMPAS

COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) is a "case management system for criminal justice practitioners". The system produces recidivism risk scores. It has been used in New York, California and Florida, but most extensively in Wisconsin since 2012, at a variety of stages in the criminal justice process, from sentencing to parole. The documentation for the software describes it as an "objective statistical risk assessment tool".

In 2013, Paul Zilly was convicted of stealing a push lawnmower and some tools in Barron County, Wisconsin. The prosecutor recommended a year in county jail and follow-up supervision that could help Zilly with "staying on the right path." His lawyer agreed to a plea deal. But Judge James Babler, upon seeing Zilly's COMPAS risk scores, overturned the plea deal that had been agreed by the prosecution and defence, and imposed two years in state prison and three years of supervision. At an appeals hearing later that year, Babler said "Had I not had the COMPAS, I believe it would likely be that I would have given one year, six months"[13]. In other words, the judge believed the risk scoring system to hold more insight than the prosecutor, who had personally interacted with the defendant.

[13] J. Angwin, J. Larson, S. Mattu, and L. Kirchner, "Machine bias," ProPublica, 2016.

The Ethics of Classification

The appeal of classification is clear. It creates a sense of order and understanding. It enables us to formulate problems neatly and solve them. An email is spam or it’s not; an x-ray shows tuberculosis or it doesn’t; a treatment was effective or it wasn’t. It can make finding things more efficient in a library or online. There are lots of useful applications of classification.

We tend to think of taxonomies as objective categorisations, but often they are not. They are snapshots in time, representative of the culture and biases of their creators. The very act of creating a taxonomy can grant existence to some individuals while erasing others. Classifying people inevitably reduces them to labels; labels that can result in people being treated as members of a group rather than as individuals; labels that can linger for much longer than they should (something it's easy to forget when creating them). The Dewey Decimal System, for example, was developed in the late 1800s and widely adopted in the 1930s to classify books. Until 2015, it categorised homosexuality as a mental derangement.

Classification of People

From the 1930s through the Second World War, machine classification systems were used by Nazi Germany to process census data in order to identify and locate Jews, determine what property and businesses they owned, find anything of value that could be seized and, finally, send them to their deaths in concentration camps. Classification systems have often been entangled with political and social struggle, and they have been used extensively in many parts of the world to enforce social and racial hierarchies. In apartheid South Africa, racial classification determined everything from where people could live and work to whom they could marry. In 2019 it was estimated that some half a million Uyghurs (and members of other Muslim minorities) were being held in internment camps in China without charge, for the stated purposes of countering extremism and promoting social integration.

Recent papers on detecting criminality[14], sexuality[15] and ethnicity[16] from facial images have sparked controversy in the academic community. The last of these, in particular, looks for facial features that identify, among others, Chinese Uyghurs. Physiognomy (judging character from the physical features of a person's face) and phrenology (judging a person's level of intelligence from the shape and dimensions of their cranium) have historically been used by oppressors as pseudo-scientific tools to "prove" the inferiority of races and justify subordination and genocide. It is not without merit, then, to ask whether some technologies should be built at all. For some, machine gaydar might be a fun application to play with among friends, but in the 70 countries where homosexuality is still illegal (some of which enforce the death penalty), it is something rather different.

[14] X. Wu and X. Zhang, "Automated inference on criminality using face images," 2017. Available: https://arxiv.org/abs/1611.04135

[15] Y. Wang and M. Kosinski, "Deep neural networks are more accurate than humans at detecting sexual orientation from facial images," Journal of Personality and Social Psychology, 2018.

[16] C. Wang, Q. Zhang, W. Liu, Y. Liu, and L. Miao, "Facial feature discovery for ethnicity recognition," Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 2018.

1.5.2 Personalisation and the Filter Bubble

Many believed the internet would breathe new life into democracy. The decreased cost and increased accessibility of information would result in greater decentralisation of power and flatter social structures. In this new era, people would be able to connect, share ideas and organise grassroots movements at such a scale that it would enable a step change in the rate of social progress. Some of these ideas have been realised to an extent, but the increased ability to create and distribute content, and the corresponding volume of data, has created new problems. The amount of information available to us through the internet is overwhelming: email, blog posts, Twitter, Facebook, Instagram, LinkedIn, WhatsApp, YouTube, Netflix, TikTok and more. Today there are seemingly endless ways and places for us to communicate and share information. This barrage of information has resulted in what has been described as the attention crash. There is simply too much information for us to attend to all of it meaningfully. The channels through which new information can demand our attention have also multiplied. We carry our smartphones everywhere we go and sleep beside them. There is hardly a waking moment when we are unplugged and inaccessible. The demands on our attention and focus have never been greater. Media producers themselves have adapted their content to accommodate our shortened attention spans.

With so much information available, it's easy to see the appeal of automatic filtering and curation. And of course, how good would such a system really be if it didn't take into account our personal tastes and preferences? So what's the problem? Over the last decade, personalisation has become entrenched in the systems we interact with day to day. Targeted advertising was just the beginning. Now it's not just the trainers you browsed once that follow you around the web until you buy them; it's everything. Since 2009, Google has returned personalised results every time someone queries its search engine, so two people who enter the same text don't necessarily get the same results. In 2021 YouTube had more than two billion logged-in monthly users. Three quarters of adults in the US use it (more than use Facebook or Instagram) and 80% of US parents of children under 11 watch it. It is the second most visited site in the world after Google, with visitors viewing just under nine pages and spending 42 minutes per day there, on average. In 2018, 70% of the videos people watched on YouTube were recommended. Some 40% of Americans under thirty get their news through social networking sites such as Twitter and Facebook, and this curation may be happening without you even knowing. Since 2010, it has not been the Washington Post that decides which news stories you see in the prime real estate that is the top right-hand corner of its home page; it's Facebook. The same goes for the New York Times. So the kinds of algorithms that once determined what we spent our money on now determine our very perception of the world around us. The only question is, what are they optimising for?

Set aside, for a moment, the fact that concentrating the power to shape people's perception of the world in just a few hands is in itself a problem. A question worth pondering is what kind of citizens people would make if they only ever saw things they 'like' or feel the impulse to 'comment' on (or indeed any other proxy for interest, engagement or attention). As Eli Pariser put it in his book The Filter Bubble, "what one seems to like may not be what one actually wants, let alone what one needs to know to be an informed member of their community or country". The internet has made the world smaller, and with that we've seen great benefits. But the hope that, because anyone (regardless of their background) could be our neighbour, people would find common ground has not been realised to the extent many expected. In some senses personalisation does the exact opposite. It risks us all living in a world full of mirrors, where we only ever hear the voices of people who see the world as we do and are deprived of differing perspectives. Of course, in some respects we have always lived in our own filter bubbles, but what has changed is that now we don't make the choice, and often don't even know when we are in one. We don't know when or how decisions are made about what we should see. We are more alone in our bubbles than we have ever been before.

Social capital is created by the interpersonal bonds we build through shared identity, values, trust and reciprocity. It encourages people to collaborate in order to solve common problems for the common good. There are two kinds of social capital: bonding and bridging. Bonding capital is acquired by developing connections within groups that have high levels of similarity in demographics and attitudes, the kind you might build by, say, socialising with colleagues from work. Bridging capital is created when people from different backgrounds (race, religion, class) connect, something that might happen at, say, a town hall meeting. The problem with personalisation is that, by construction, it reduces opportunities to see the world through the eyes of people who don't necessarily look like us. It reduces bridging capital, and that is exactly the kind of social capital we need to solve wider problems that extend beyond our own narrow or short-term self-interest.

1.5.3 Disinformation

In June 2016, it was announced that Britain would be leaving the EU. 33.5 million people voted in the referendum, of whom 51.9% voted to leave. The decision, which will affect the UK not just for a single term of government but for generations to come, would have been reversed had fewer than 2% of voters chosen differently. Ebbw Vale is a small town in Wales where 62% of voters (the largest majority in the country) chose to leave. The town has a history in steel and coal dating back to the late 1700s. By the 1930s, the Ebbw Vale Steelworks was the largest in Europe by volume. In the 1960s it employed some 14,500 people. But towards the end of the twentieth century, after the collapse of the UK steel industry, the town suffered one of the highest unemployment rates in Britain. What was strange about the overwhelming support for leaving was that Ebbw Vale was perhaps one of the largest recipients of EU development funding in the UK. A £350m regeneration project funded by the EU replaced the industrial wasteland left behind when the steelworks closed in 2002 with The Works (housing, retail and office space, wetlands, a learning campus and more). A further £33.5 million from the European Social Fund paid for a new college and apprenticeships to help young people learn a trade. An additional £30 million for a new railway line, £80 million for road improvements and, shortly before the vote, a further £12.2 million for other upgrades and improvements all came from the EU.

When journalist Carole Cadwalladr returned to the small town where she had grown up, to report on why residents had voted so overwhelmingly in favour of leaving the EU, she was just as puzzled. It was clear how much the town had benefited from being part of the EU: the new road, train station, college, leisure centre and enterprise zones (it was designated an EU Tier 1 area, eligible for the highest level of grant aid in the UK). Everywhere she went she saw signs, proudly displaying the EU flag, saying so. So she wandered around town asking people, and their answers did nothing to dispel her confusion. Time and time again, people complained about immigration and foreigners. They wanted to take back control. But the immigrants were nowhere to be found, because Ebbw Vale had one of the lowest rates of immigration in the country. So how did this happen? How did a town with hundreds of millions of pounds of EU funding vote to leave the EU because of immigrants who didn't exist? In her emotive TED talk[17], Cadwalladr shows some of the Facebook adverts that people were targeted with as part of the Leave campaign (see Figure 1.5). They were all centred on a lie: that Turkey was joining the EU.

[17] C. Cadwalladr, "Facebook's role in Brexit - and the threat to democracy," TED, 2019.

![Figure 1.5: Targeted disinformation adverts shown on Facebook[17].](01_Context/figures/Fig_Brexit.png)